Concrete Abstract Takeaways

Four positive things I learned in our class on Machine Learning Evaluation

In class last Thursday, Deb challenged me to come up with positive takeaways from our evaluation class. It’s funny because I only have positive takeaways! But then I realized that I’m the weirdo, and have an odd notion of what people should get out of computer science classes. Let me explain myself and my four main positive takeaways.

In Computer Science, a positive takeaway almost always means a technological solution. Computer Science courses tend to be prescriptive rather than analytic. We’re a field built on algorithms after all. Each CS class gives a list of problems and a list of solutions. Computer scientists love to present fields as libraries of conditional statements: “if this, then do that.” It’s then on you to import the appropriate libraries and stitch them together in your Jupyter Notebook.

However, I’m not a computer scientist.1 I just hide out in a computer science department. I’m ok with classes where the goal is just to get you thinking about what we do. I’m not one for telling you what you should do.

That means my positive takeaways from this class were never going to be of the form “Use cosine learning rates and a proper scoring rule and never report ROCAUC.” I don’t believe in a program that guarantees prediction should work. There is and cannot be a dogmatic meta-science of machine learning evaluation. And let me tell you, you don’t want dogmatic meta-science anyway! Ritualized metascience just leads to a slew of mediocre papers, not to an actual acceleration of “good science.”

The main takeaway from this class is “hey, machine learning evaluation is rich and complicated.” I also have some concrete, specific takeaways, each of which requires its own blog post. Let me list a few here now, and I’ll get to them over the next few weeks.

Engineering Studies: The syllabus opens with the question, “What does it mean for machine learning to work?” I asked that question because I was interested in a broader question: what does it mean for any engineering system to work? I have written this here before, but I now have a satisfactory answer to expound upon: a system works if it doesn’t yet not work.

We create a system of checks and metrics, find a system that matches these, and run with it until we encounter an exception. When we encounter such an exception, we figure out which part of our design made a wrong assumption and patch the design. By keeping a tally of what has worked in the past, this design process becomes faster in the future.

Max Raginsky and I have been going back and forth for a year about what a “Lakatosian Defense” of engineering might look like. This class got me a step closer to us writing that down explicitly.2

Metrical Determinism: OK, maybe that first one is too philosophical for you. Here’s something more concrete. Before this class, I don’t think I appreciated the extent to which cost functions dictate algorithms. Specifying the evaluation metric dictates your prediction algorithms and predictive policy. This is much more subtle than I had appreciated, and you can see its implication throughout prediction systems. Metrical determinism was most clear in the discussion of scoring rules and coherence. Similarly, if you post-hoc change your metric, you can completely revise how you view predictions, and can see whatever you want to see. Too many “debunking” papers in machine learning conferences are just artifacts of how metrics dictate what “working” means. I’ll write more about this, but I think this does provide guidelines for practice.

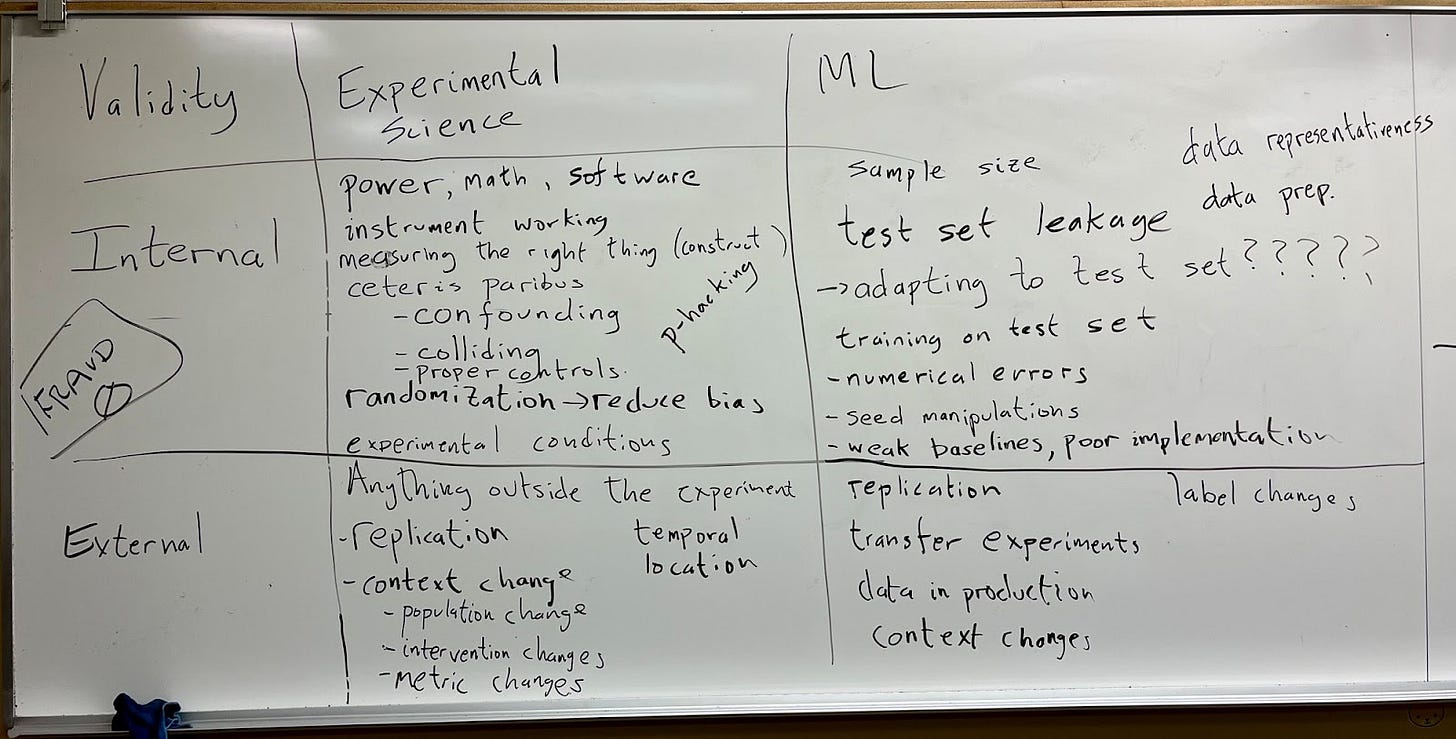

Validity. Here’s one where Deb and I are constantly in disagreement. Deb wrote last week that she is still unsatisfied about how to think about validity in prediction systems. I actually think we hammered out a robust parallelism between the sociopsychological experimental discourse and pure predictive engineering. In class, we brainstormed topics from internal and external validity in both experimental science and machine learning. Here’s the whiteboard from that class, which I think illustrates a clean taxonomy:

Internal validity in prediction systems encompasses everything that goes into preparing a benchmark. This can be the sample size, the data representativeness, leakage of information between the test and train, etc. External validity refers to anything that happens after the benchmark is published, including replication of the benchmark, experiments on the transfer of predictions to other contexts, or using the predictor in production. Construct validity is the specification of what features and labels mean. I’ll write more about this and try to convince Deb to respond with a guest post.

Future Pedagogy: Finally, I’ve mentioned this before, but this class helped me conceive of another class I’d like to teach at some point: An undergraduate introduction to machine learning grounded in the holdout method. Due to department politics and undergraduate demand, I don’t know when and where I’ll get the opportunity to teach such a class. And maybe by the time I do get that opportunity, the machine learning world will look completely different, with AGI having arrived and humans relegated to being batteries. But I like this course outline, which, for me, is shockingly prescriptive.

Thanks to the kind mentorship and advocacy of Steve Wright, my first professorship was in the CS department at UW Madison. I was confused because I had never identified myself as a computer scientist, nor had any idea what computer science was. Amos Ron set me straight: “Computer science is whatever computer scientists are doing today.” This remains a valid definition.

Note to Max: we’re on the hook to finish this now.

"Note to Max: we’re on the hook to finish this now."

It's happening!

I’ve really enjoyed your series of posts about this class. For the past few years I’ve helped teach a summer workshop on computer vision methods for ecology (https://cv4ecology.caltech.edu/). I’d say we probably spend >50% of the time on evaluation. I think students are surprised to learn that actually training the ML models is the boring part; the interesting challenge is evaluation — how do you construct data splits and choose metrics that are meaningful for the actual scientific question being asked? Anyway, I’m very excited to see your class being taught and am really looking forward to digging into the course materials. Thanks for sharing!