Secrets of Intelligence Services

I asked Claude Code to build Claude Code. You won’t believe what happened next.

It is simultaneously true that coding agents are by far my favorite generative AI innovation and the most annoying to read about online. Agents profoundly democratize computing in ways as impactful as the personal computer and the graphical user interface. Unfortunately, they are also sold by AI companies and thus come shrouded in animistic mysticism. This leads to dumb one-day stories like Moltbook or endless annoying Twitter threads by economists on the future of labor.

I know that any sufficiently advanced technology is indistinguishable from magic, and agents do feel magical in a way distinct from chatbots. They let you produce working software for almost any idea you have in your head, conceptually a major technological breakthrough beyond the language machine. But the funny thing about agents is that they are not particularly advanced technology in the grand scheme of things. Agents are far, far simpler than LLMs.

I was trying to explain to a friend how agents worked, and I realized I didn’t have a good vocabulary for the job. Reading a bunch of tutorials and looking at a bunch of open source repositories didn’t help. On a whim, I tried something silly that turned out to be far more illuminating than I expected.

I asked Claude Code to build itself.

“Build a minimalist coding agent that exposes step by step how the agent works.”

The resulting 400 lines of overly commented Python were instructive. My “microagent” generates perfectly reasonable code, and, because Claude is sentient and clearly knows everything about me,[1] the design uncannily emphasizes the power of feedback that I’ve been blogging about. The agent is simple, ordinary software in feedback with a complex, extraordinary language model. Agents are a perfect example of how the feedback interconnection of a basic, precise component with an uncertain, generalist component achieves outcomes neither component can enact on its own.

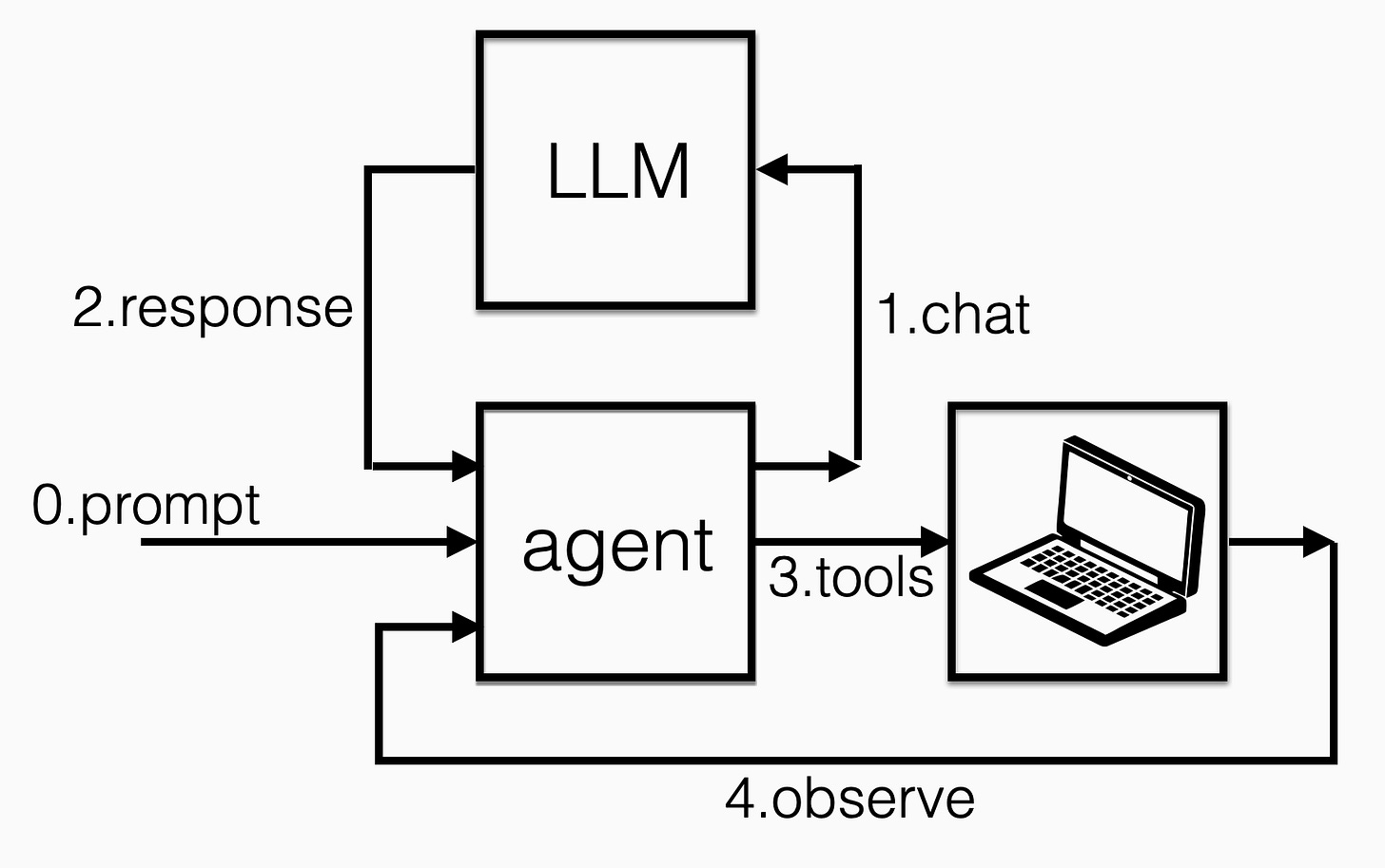

For the purposes of this post, the human (aka me) has one job: setting the initial conditions of the feedback loop with a natural language prompt. With this prompt, the agent kicks off a sequence of interactions with the LLM. At every turn, the agent receives a message from the LLM, parses it to identify a valid action, takes the action, and then sends the LLM a message describing the outcome. It also has the option to stop acting and end the loop.

The set of valid agent actions, called “tools” in agent land, is small, but these tools take you well beyond chatting. They execute actual actions on your computer. Software can do a surprising amount with three simple actions: read_file, write_file, and shell. The first two are self-explanatory. read_file takes as input a filename and outputs the contents. write_file takes as input a filename and content and writes the content to disk under that filename. shell executes a command-line script and returns its output. These three operations let you copy a piece of software onto your computer, compile it, and run it. That’s enough power to do basically anything. These connections to the world outside the chat window are what make agents so impressive.
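To make the three tools concrete, here is a minimal sketch of how they might look as plain Python functions. These are hypothetical implementations written for illustration, not the microagent’s actual code; the function names follow the post, and the return messages and the 30-second timeout are assumptions.

```python
import subprocess
from pathlib import Path


def read_file(path: str) -> str:
    """read_file: take a filename, return its contents."""
    return Path(path).read_text(encoding="utf-8")


def write_file(path: str, content: str) -> str:
    """write_file: take a filename and content, write the content to disk."""
    data = content.encode("utf-8")
    Path(path).write_bytes(data)
    return f"Wrote {len(data)} bytes to {path}"


def shell(command: str, timeout: float = 30.0) -> str:
    """shell: run a command-line script and return what it printed."""
    # shell=True hands the string to the system shell, so the LLM can run
    # anything the user could; a real agent would likely sandbox this or
    # ask the human before running it.
    result = subprocess.run(
        command, shell=True, capture_output=True, text=True, timeout=timeout
    )
    return result.stdout + result.stderr


# The agent looks tools up by the names the LLM uses to request them.
TOOLS = {"read_file": read_file, "write_file": write_file, "shell": shell}
```

Nothing here involves machine learning: each tool is a few lines of ordinary standard-library code, and the dictionary is all the agent needs to turn a tool name into an action.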

Here’s an architecture diagram of the simple agent, with labels denoting the order of the data flow. Steps 1-4 are repeated until the response tells the agent to be done.

Let me show you the gory details of how it all works in a simple example. I type the following text into the microagent software:

Create a file called test.txt with ‘hello world’

The agent now needs to send this command to the LLM. It uses JSON, not English, to do so. JSON, short for JavaScript Object Notation, is one of the core data formats on the internet. It consists of long spaghetti lists of “human readable” name-value pairs that encode data in ways that make it relatively simple for different applications to talk to each other. Here’s the agent’s message in JSON:

```json
{
  "messages": [
    {
      "role": "Ben",
      "content": "Create a file called test.txt with 'hello world'"
    }
  ],
  "tools": [
    "read_file",
    "write_file",
    "shell"
  ]
}
```

This isn’t too bad to read, right? There are some curly braces and square brackets for grouping things. The first grouping encodes my prompt. The second grouping lists the agent’s available tools.

The agent sends this message to Anthropic via standard web protocols. All of the major AI players provide Python interfaces and JSON schemas for interacting with their language models. You send them a properly formatted package of data over an internet tube, and they will send you something back.
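Here is a minimal sketch of what “sending this message” amounts to, using only Python’s standard library. It assumes Anthropic’s public Messages API: the endpoint URL, the x-api-key and anthropic-version headers, and a tool format with a name, a description, and a JSON Schema for the arguments. The model name is a placeholder, and the raw API expects the role "user" rather than a name like "Ben". The JSON shown in this post is the microagent’s simplified view of the exchange, so field names differ from the raw API.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"

# A tool described in the Messages API format: a name, a description,
# and a JSON Schema for the arguments the LLM must supply.
WRITE_FILE_TOOL = {
    "name": "write_file",
    "description": "Write content to a file on disk.",
    "input_schema": {
        "type": "object",
        "properties": {
            "path": {"type": "string"},
            "content": {"type": "string"},
        },
        "required": ["path", "content"],
    },
}


def build_request(api_key, messages, tools, model="MODEL_NAME_PLACEHOLDER", max_tokens=1024):
    """Package the conversation and tools as one HTTP POST (not sent here)."""
    body = {"model": model, "max_tokens": max_tokens, "messages": messages, "tools": tools}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


req = build_request(
    "YOUR_API_KEY",
    [{"role": "user", "content": "Create a file called test.txt with 'hello world'"}],
    [WRITE_FILE_TOOL],
)
# Sending it takes one more step (needs a real key and network access):
# with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)
```

The point of the sketch is how mundane this is: a URL, three headers, and a JSON body, the same plumbing as any other web API call.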

In this example, Anthropic processes the JSON and returns the following:

```json
{
  "content": "I'll create a file called test.txt with the content 'hello world'.",
  "tool_calls": [
    {
      "name": "write_file",
      "parameters": {
        "path": "test.txt",
        "content": "hello world"
      },
      "id": "toolu_01..."
    }
  ]
}
```

Here, the LLM’s reply includes English text designed to make you think it is conscious, which the agent dutifully prints to the screen. The LLM also sends a structured instruction naming a tool for the agent to run, along with the arguments to run it with.

The agent expects the LLM to return JSON that indicates a command to execute one of its tools. I don’t know what actually happens on the Anthropic side of the line, but it doesn’t really matter. Maybe Anthropic just takes the raw JSON and feeds it as raw tokens into a language model. Maybe Anthropic has its own simple agent that reads the JSON and dispatches prompts to the LLMs accordingly. Maybe it’s actual magic of a mystical elf using dark sorcery.

Whatever is happening behind the curtain doesn’t matter to the agent. The agent expects a particular kind of answer back. If it’s not in a valid format, the agent can flag an error and quit. At the expense of a little more software complexity, you could add simple rules to “try again,” hoping that the internal stochasticity of the LLM might yield a valid JSON message after enough attempts. Regardless, the agent side is all just simple rules that you would use to build any internet application.
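The “flag an error or try again” logic really is just simple rules. Below is a minimal sketch of both, written against the simplified reply format shown in this post (fields content, tool_calls, is_final). The names InvalidReply, parse_reply, and ask_with_retries are hypothetical, and ask stands in for whatever function actually fetches a raw reply from the LLM.

```python
import json


class InvalidReply(Exception):
    """The LLM's reply did not match the schema the agent expects."""


def parse_reply(raw: str) -> dict:
    """Parse one raw reply and check the fields the agent relies on."""
    try:
        reply = json.loads(raw)
    except json.JSONDecodeError as err:
        raise InvalidReply(f"not JSON: {err}") from err
    if not isinstance(reply, dict) or not isinstance(reply.get("content", ""), str):
        raise InvalidReply("missing or malformed 'content'")
    calls = reply.get("tool_calls", [])
    if not isinstance(calls, list):
        raise InvalidReply("'tool_calls' is not a list")
    for call in calls:
        if not isinstance(call, dict) or not all(k in call for k in ("name", "parameters", "id")):
            raise InvalidReply(f"malformed tool call: {call!r}")
    return reply


def ask_with_retries(ask, max_attempts: int = 3) -> dict:
    """Call ask() until it yields a valid reply; give up after max_attempts."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return parse_reply(ask())
        except InvalidReply as err:
            last_error = err  # hope the LLM's randomness does better next time
    raise InvalidReply(f"gave up after {max_attempts} attempts: {last_error}")
```

Retrying costs one extra loop; quitting is just letting the final exception propagate. Either way, the agent never needs to know why the LLM produced a bad reply.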

In the simple running example here, the JSON sent back from Anthropic is perfectly fine. After displaying the English to the screen to placate me, the agent, explicitly programmed to read JSON, skips to the “tool_calls” field and tries to execute whatever is there. It sees the tool name “write_file” along with the path and content to pass to that tool as arguments. You would not be surprised to learn that applying write_file to those arguments creates a file “test.txt” containing the words “hello world.”
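Executing the “tool_calls” field comes down to a dictionary lookup and a function call. This is a minimal sketch, assuming the simplified reply format above; run_tool_calls and the single-entry TOOLS registry are hypothetical, and the result message mimics the “✓ Wrote 11 bytes” text that appears in the next message the agent sends.

```python
from pathlib import Path


def write_file(path: str, content: str) -> str:
    data = content.encode("utf-8")
    Path(path).write_bytes(data)
    return f"\u2713 Wrote {len(data)} bytes to {path}"


# Registry mapping the tool names the LLM may use to plain Python functions.
TOOLS = {"write_file": write_file}


def run_tool_calls(reply: dict) -> list:
    """Execute every tool call in a reply; return one 'tool' message per call."""
    results = []
    for call in reply.get("tool_calls", []):
        func = TOOLS.get(call["name"])
        if func is None:
            output = f"Error: unknown tool {call['name']!r}"
        else:
            try:
                # parameters {"path": ..., "content": ...} become keyword arguments
                output = func(**call["parameters"])
            except Exception as err:  # report failures to the LLM instead of crashing
                output = f"Error: {err}"
        results.append({"role": "tool", "content": output, "tool_use_id": call["id"]})
    return results
```

Errors are turned into text rather than crashes by design: the failure message goes back to the LLM as feedback, which gives it a chance to correct itself on the next turn.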

Once this action is successfully executed, the agent sends the following message back to the LLM:

```json
{
  "messages": [
    {
      "role": "Ben",
      "content": "Create a file called test.txt with 'hello world'"
    },
    {
      "role": "assistant",
      "content": "I'll create a file called test.txt with the content 'hello world'.",
      "tool_calls": [
        {
          "name": "write_file",
          "id": "toolu_01..."
        }
      ]
    },
    {
      "role": "tool",
      "content": "\u2713 Wrote 11 bytes to test.txt",
      "tool_use_id": "toolu_01..."
    }
  ],
  "tools": [
    "read_file",
    "write_file",
    "shell"
  ]
}
```

You now see why I put scare quotes around human readable. Even this simple example is quickly getting out of hand. However, this message reveals something surprising: in my trivial implementation, the agent and LLM do not share state. All interaction occurs through a stateless interface (a REST API), and the agent sends the entire interaction history to the LLM in every round. There are likely smarter ways to save tokens here, but those details are for another day. Agent software can create an illusion of state for the human, while actually just logging a long, unsummarized memory.
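The “illusion of state” is just a Python list that keeps growing. Here is a minimal sketch, assuming the simplified message format shown above; next_payload is a hypothetical name. Note that json.dumps escapes non-ASCII characters by default, which may be why the check mark shows up as \u2713 in the message above.

```python
import json

TOOL_NAMES = ["read_file", "write_file", "shell"]


def next_payload(history: list, reply: dict, tool_results: list) -> str:
    """Record one round in the agent's memory and serialize the entire history.

    The LLM keeps no state between calls, so every request carries the whole
    conversation so far, and it grows by at least one message per round.
    """
    history.append({
        "role": "assistant",
        "content": reply["content"],
        # Mirror the message above: keep the name and id of each tool call.
        "tool_calls": [{"name": c["name"], "id": c["id"]} for c in reply.get("tool_calls", [])],
    })
    history.extend(tool_results)
    return json.dumps({"messages": history, "tools": TOOL_NAMES})
```

Because nothing is summarized or dropped, the request size grows with every turn, which is exactly where the “smarter ways to save tokens” would come in.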

Whatever the case, Anthropic munges the long message and sends back the curt reply.

```json
{
  "content": "The file test.txt has been successfully created with the content 'hello world'.",
  "is_final": true
}
```

The agent sees the LLM saying “is_final” is true. This is the signal to end the interaction. The agent prints the content to the screen and closes up shop.
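Putting the pieces together, the whole agent is one hardcoded loop: ask, act, report back, and stop when told to. This is a minimal sketch under the simplified format used in this post, not the microagent itself. To keep it runnable offline, scripted_llm is a hypothetical stand-in that replays the two replies from this example instead of calling a real model, and the max_turns cap is an assumption added so a misbehaving LLM cannot loop forever.

```python
from pathlib import Path


def write_file(path: str, content: str) -> str:
    data = content.encode("utf-8")
    Path(path).write_bytes(data)
    return f"\u2713 Wrote {len(data)} bytes to {path}"


TOOLS = {"write_file": write_file}


def run_agent(prompt: str, llm, max_turns: int = 10) -> str:
    """The whole agent: ask the LLM, act, report back, stop when told to."""
    history = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        reply = llm({"messages": history, "tools": list(TOOLS)})
        print(reply.get("content", ""))  # the English, shown to the human
        if reply.get("is_final"):
            return reply.get("content", "")
        calls = reply.get("tool_calls", [])
        history.append({"role": "assistant", "content": reply.get("content", ""), "tool_calls": calls})
        for call in calls:
            func = TOOLS.get(call["name"])
            output = func(**call["parameters"]) if func else f"Error: unknown tool {call['name']!r}"
            history.append({"role": "tool", "content": output, "tool_use_id": call["id"]})
    raise RuntimeError(f"no final answer after {max_turns} turns")


def scripted_llm(path: str):
    """Hypothetical stand-in for the real LLM that replays this post's two replies."""
    replies = [
        {
            "content": f"I'll create a file called {path} with the content 'hello world'.",
            "tool_calls": [{"name": "write_file", "parameters": {"path": path, "content": "hello world"}, "id": "toolu_01"}],
        },
        {"content": f"The file {path} has been successfully created with the content 'hello world'.", "is_final": True},
    ]

    def llm(request: dict) -> dict:
        return replies.pop(0)

    return llm
```

Calling run_agent("Create a file called test.txt with 'hello world'", scripted_llm("test.txt")) prints both replies and leaves test.txt on disk. In this sketch, swapping scripted_llm for a function that posts the request to a real API is the only change needed to talk to an actual model; the loop itself stays the same.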

The file test.txt has been successfully created with the content ‘hello world’.

The simple microagent can of course do far more complex tasks than this. You can try it out if you want. It’s not going to be as powerful or as fun as Claude Code, but it shows how far you can get with a hardcoded loop with access to a minimal set of tools. There is no machine learning or fancy “AI” in the agent. It’s boring, standard software. Even though people like to speak breathlessly about the power of agents and their apparent agency, agents are simple, rule-based systems that use a known, fixed JSON schema to communicate with LLMs.

LLMs, by contrast, remain deeply weird and mysterious. I don’t care how many pictures someone draws to explain transformers. It’s been a decade, and not a single explainer of LLM architecture has helped me understand what they actually do or why.

And yet, I don’t have to understand human language, machine language, or the training set of language models to use them in an agent loop. The agent has no idea how the LLM works, and neither do I. It is totally fine for me to think of the LLM as a stochastic parrot. I can also think of it as an omniscient god. The agent merely needs enough logic so that, when the LLM gives an unacceptable answer, the agent software can recover.

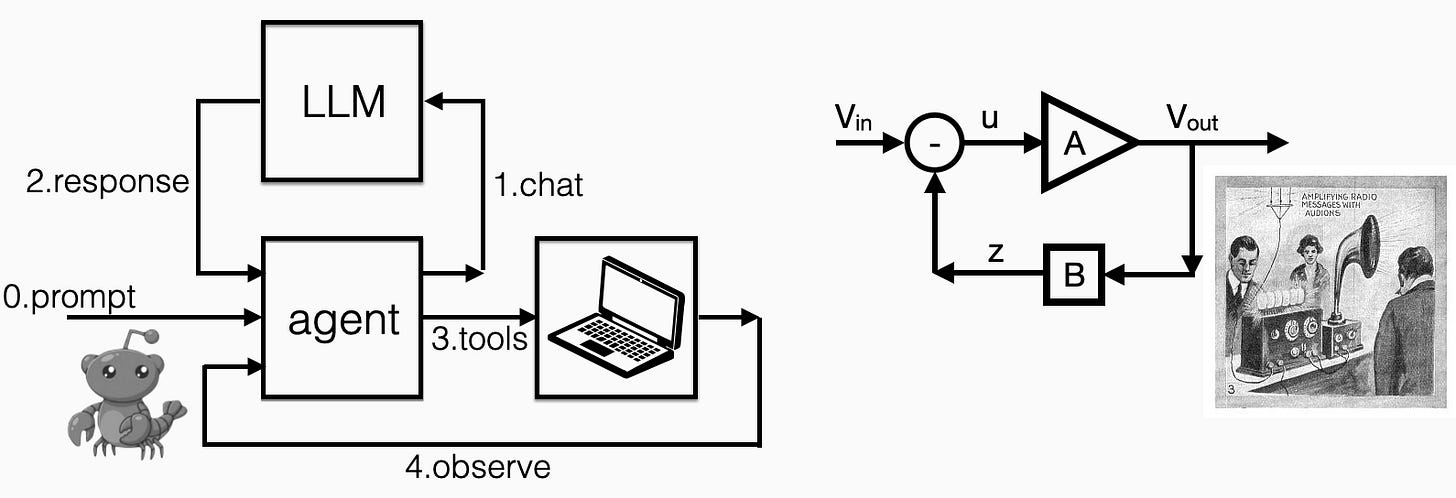

The microagent feedback loop parallels the simple amplifier story I told a couple of weeks ago. There we had an unpredictable but powerful amplifier, made precise by an accurate but small attenuator. In coding agents, feedback with the agent makes LLMs more predictable and verifiable. It also provides a path to translate the text output of LLMs into real, impactful actions. Agents are boring, precise, and logical. LLMs are mysterious, nondeterministic, and contain an unfathomable subset of human knowledge. In feedback, they are the most useful and engaging generative AI product yet.
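The amplifier side of the analogy can be checked numerically, assuming the story is the textbook negative-feedback amplifier: an amplifier with large but unreliable open-loop gain A, with a fraction β of its output fed back and subtracted from its input, has closed-loop gain A / (1 + Aβ), which is close to 1/β whenever Aβ is much larger than 1. The values of A and β below are made up for illustration.

```python
def closed_loop_gain(A: float, beta: float) -> float:
    """Gain of an amplifier with open-loop gain A under negative feedback beta."""
    return A / (1 + A * beta)


beta = 0.01  # small, precise attenuator: target gain 1 / beta = 100
for A in (1e4, 1e5, 1e6):  # open-loop gain uncertain by a factor of 100
    print(f"A = {A:>9.0f}   closed-loop gain = {closed_loop_gain(A, beta):.3f}")
# prints closed-loop gains 99.010, 99.900, 99.990
```

A hundredfold change in the powerful but sloppy component moves the overall gain by less than 1%, because the precise component sets the answer. That is the sense in which the boring agent can make the unpredictable LLM useful.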

[1] Goddamn it, I’m joking.
