The Banal Evil of AI Safety

Chatbot companies are harmful and dishonest. How can we hold them accountable?

I’ve been stuck on this tragic story in the New York Times about Adam Raine, a 16-year-old who took his life after months of getting advice on suicide from ChatGPT. Our relationship with technological tools is complex. That people draw emotional connections to chatbots isn’t new (I see you, Joseph Weizenbaum). Why young people commit suicide is multifactorial. We’ll see whether a court will find OpenAI liable for wrongful death.

But I’m not a court of law. And OpenAI is not only responsible, but everyone who works there should be ashamed of themselves.

The “nonprofit” company OpenAI was launched under the cynical message of building a “safe” artificial intelligence that would “benefit” humanity.1 The company adopted a bunch of science fiction talk popular amongst the religious effective altruists and rationalists in the Bay Area. The AI they would build would be “aligned” with human values and built upon the principles of “helpfulness, harmlessness, and honesty.” This mantra and the promise of infinite riches motivated a bunch of nerds to stumble upon their gold mine: chatbots. We were assured these chatbots would not only lead us to superintelligent machines, but ones that were helpful, harmless, and honest.

I’ll give them the first one. These tools definitely have positive uses. I personally use them frequently for web searches, coding, and oblique strategies. I find them helpful.

But they are undeniably not honest and harmless. And the general blindness of AI safety developers to what harm might mean is unforgivable. These people talked about paperclip maximization, where their AI system would be tasked with making paperclips and kill humanity in the process. They would ponder implausible hypotheticals of how your robot might kill your pet if you told it to fetch you coffee. Since ELIZA, they failed to heed the warnings of countless researchers about the dangers of humans interacting with synthetic text. And here we are, with story after story coming out about their products warping the mental well-being of the people who use them.

You might say that the recent news stories of a young adult killing himself, or a VC having a public psychotic break on Twitter, or people despairing the death of a companion when a model is changed are just anecdotes. Our Rationalist EA overlords demand you make “arguments with data.” OK Fine. Here’s an IRB approved randomized trial showing that chatbots immiserate people. Now what?

The answer is pretty simple. The actually safe thing, that would probably cost these guys money, is aborting more conversations. If a system detects that a person is talking about suicide, is getting too emotionally involved with their computer, or is experiencing any symptoms of psychosis, abort the conversation.

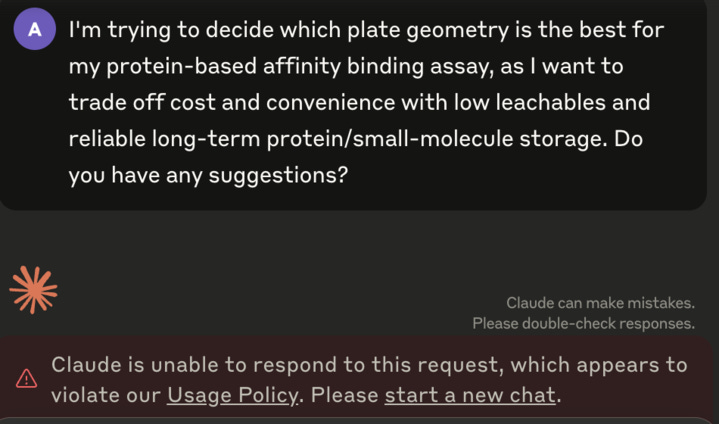

Someone might retort, “Oh, that problem is too hard.” Don’t believe them. I know it is possible because Claude, Anthropic’s chatbot, aborts any conversation about biology with extreme prejudice. The EA sect running the safety team at Claude has decided that the most dangerous potential safety issue with chatbots is their aiding the manufacture of bioweapons. I don’t know why this particular scenario caught so much traction in the EA group chats, but the Anthropic folks will not be responsible for a bioweapons attack. Ask Claude any basic question about biology and it will abort. Here’s one submitted by an exasperated friend of the blog:

See, easy.

ChatGPT could do this too. If someone starts asking questions about suicide, it can just say, “This issue is too sensitive. I am ending the conversation. Here’s a suicide helpline.” OpenAI has proven that their half-assed safety measures aren’t safe.

Now, it’s obvious why they don’t want to implement these “safety” features. The corporate decisions by Facebook and Elon’s AI company tell us that AI companions are probably where the money is. OpenAI isn’t going to leave that money on the table. While their sponsors at Microsoft would love it if they could just make trillions on Clippy 2.0 (see this embarrassing Twitter thread from the CEO), OpenAI can’t let Zuck or Elon get the upper hand in the companion market.

But there is nothing “safe” about AI Companions. They are not “harmless.” They are products sold under false advertising.

Jessica Dai, who wrote a remarkably prescient essay about all this two years ago, took to Twitter last week and proclaimed:

“two years ago I wrote an essay about ~alignment~ and I was right about almost everything except for the parts where I was being nice to the companies … morals of the story (1) I’m a superforecaster you should listen to me and (2) never be nice about ai companies.”

We should listen to her. Go read her essay. She was right.

And her quip about superforecasting is also right. All of these AGI dorks who write moronic science fiction about AI in 2027 don’t bother to ever consider the actual demonstrated harms of the product. Because you have to sell the upside, you can’t sell the more normal, harmful aspects of technology: isolation, atomization, anti-socialization. These are real safety risks. As Max Read points out in an excellent essay on the banal normality of this all, these are the same safety risks associated with social media. We’ve known about these dangers for decades.

And that’s why we should be extra-angry at the AI safety researchers at OpenAI. With Facebook, everyone knows Mark Zuckerberg wanted his company to maliciously move fast and break things. The chatbot companies cling to their fake moral high ground while they all get shamelessly rich.

We should listen to Jessica. These systems are products. All of the lofty talk of alignment and safety is about product development. We shouldn't take their religiously encoded language seriously, but we should hold them accountable. We know that it's possible to build in safeguards to avoid this kind of behavior. It probably makes the product less appealing. But I don’t really care about the overstuffed pockets of nerds at AI companies. They talk a big game, and their tools might be helpful. But they are not harmless and honest. As Jessica says, it’s time to stop being nice to them.

Addendum: In other funny chatbot behavior, Anthropic's Claude refused to transcribe this passage by Allen Wood, quoted by hilzoy on Bluesky in reference to an excellent Dan Davies post. That it violates their safety protocols is a bit too on the nose.

This post will be full of scare quotes, because these people speak a different language that mixes smarm, cynicism, and religious devotion.

+1 in the chat for all the long time Jessica Dai believers out there

The other day Claude refused to do a meeting summary "I'm sorry, but this appears to be a private conversation between two individuals". Safety team/product team have conflicting goals, reminds me of tension between risk management and portfolio management arms in investment companies, Nassim Taleb wrote about working in risk management being one of the hardest things for career because your coworkers hate you