Sounds Like Noise to Me

Computational randomness in the 1920s

I frequently harp upon the difference between natural and intentional randomness. Natural randomness models observation as random, as I described last week in modeling noise in electronics or the distribution of attributes in some field study. Intentional randomness is generated by people, either for games of chance or as a convenient way to battle indecision.

The Roaring Twenties were a heyday for intentional randomness. Neyman and Fisher’s early work on randomized experiments called for randomly assigning crops to agricultural plots. For Neyman, this allocation could be done by selecting balls from an urn with an appropriate color distribution. For Fisher, the random assignments could be accomplished by shuffling cards. The idea that these sorts of selection rules returned random numbers dates back at least to Bernoulli. But were they really random?

Karl Pearson argued that they were decidedly not.

“Practical experiment has demonstrated that it is impossible to mix the balls or shuffle the tickets between each draw adequately. Even if marbles be replaced by the more manageable beads of commerce their very differences in size and weight are found to tell on the sampling. The dice of commerce are always loaded, however imperceptibly. The records of whist, even those of long experienced players, show how the shuffling is far from perfect, and to get theoretically correct whist returns we must deal the cards without playing them. In short, tickets and cards, balls and beads fail in large scale random sampling tests; it is as difficult to get artificially true random samples as it is to sample effectively a cargo of coal or of barley.”

If statistics were to be made rigorous, statisticians would need to be able to reliably generate random samples. But how? One of the first systematic approaches was compiling large tables of random numbers.

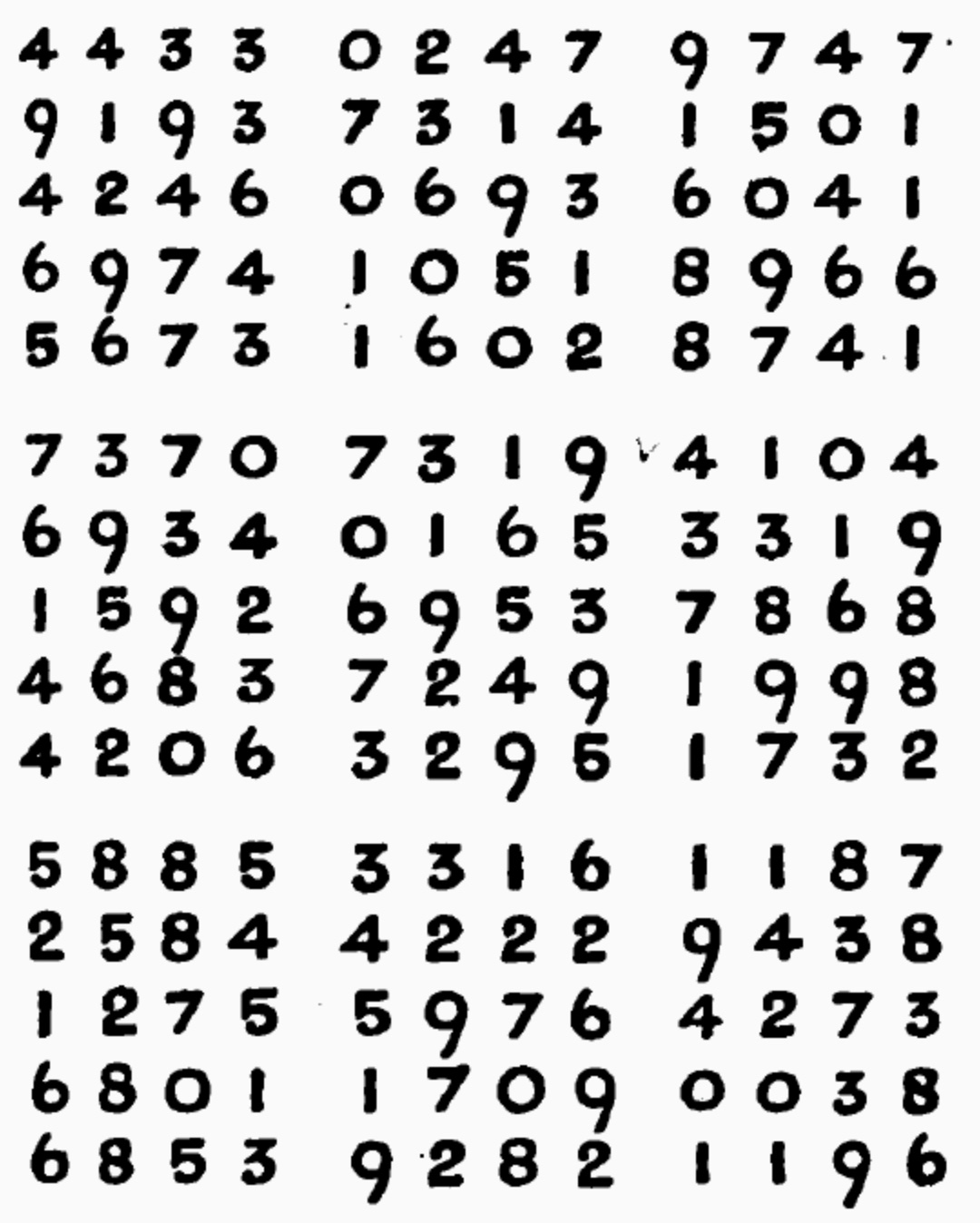

In 1927, Cambridge University Press published a book of Random Sampling Numbers by Leonard Tippett, the 15th volume of their series “Tracts for Computers.” The book consists of 26 pages of numbers, each with a 50x32 table of digits.

Altogether, the book contains 41,600 “random digits.” The book provides no details of how Tippett collected them, simply asserting “40,000 digits were taken at random from census reports.” I don’t know what that means, and no one else seems to know either. The only other information is that Ida McLearn handwrote the tables.

Karl Pearson wrote the foreword to the book, which features the quote I highlighted above. Pearson argues that there are many ways the applied statistician can use these numbers to generate samples. He suggests taking four digit runs as numbers between 0 and 9,999. These runs can be produced by scanning through the book horizontally, vertically, or diagonally, forwards or backwards. Any systematic scan would do.
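To make Pearson's recipe concrete, here is a minimal sketch of reading four-digit runs off a page of digits in a few different scan orders. The grid below is a made-up stand-in, not Tippett's actual numbers, and the function names are mine.

```python
# Hypothetical 4x8 grid of digits standing in for a page of the table.
# These are NOT Tippett's numbers; they are invented for illustration.
PAGE = [
    "29526413",
    "80731965",
    "41672058",
    "93158240",
]


def runs(digits, width=4):
    """Split a digit string into consecutive, non-overlapping runs of `width`."""
    usable = len(digits) - len(digits) % width
    return [int(digits[i:i + width]) for i in range(0, usable, width)]


def scan_horizontal(page):
    """Read left to right, top to bottom."""
    return runs("".join(page))


def scan_vertical(page):
    """Read each column top to bottom, columns left to right."""
    columns = ["".join(row[c] for row in page) for c in range(len(page[0]))]
    return runs("".join(columns))


def scan_backwards(page):
    """Read right to left, bottom to top."""
    return runs("".join(page)[::-1])


print(scan_horizontal(PAGE))
print(scan_vertical(PAGE))
print(scan_backwards(PAGE))
```

Each scan yields a different list of numbers between 0 and 9,999 from the same page, which is the sense in which any systematic scan would do.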

You can see why statisticians might find such computational methods more appealing than mechanical randomness generators. But would such a sequence be random? How could you tell? Pearson more or less says not to worry about it:

“Any such series may be a random sample, and yet a very improbable sample. We have no reason whatever to believe that Tippett's numbers are such, they have conformed to the mathematical expectation in a variety of cases, and we would suggest to the user who finds a discordance between the results provided by the table and the theory of sampling he has adopted, first to investigate whether his theory is really sound, and if he be certain that it is, only then to question the randomness of the numbers.

“But the reader must remember that in taking hundreds of samples he must expect to find improbable samples occurring or even runs of such samples; they will be in their place in the general distribution of samples, and he must not conclude, from their isolated occurrence, that Tippett’s numbers are not random.”

It’s a bit odd from our modern perspective. You could run all sorts of tests on your samples to see if they behave like random samples. If they pass the tests, then I guess you would be assured that you are generating randomness. But of course, if you spend too much time testing randomness, you run out of numbers in your table. Pearson was almost suggesting that by starting at “random” places in the table and moving in “random directions,” even more randomness could be generated from the 41,600 digits. Claims to this effect were difficult to verify. For Pearson, randomness was an “I know it when I see it” phenomenon.
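One of the simplest such tests is a chi-square check that each digit shows up about one tenth of the time. The sketch below is only an illustration: the digits come from Python's built-in generator with an arbitrary seed, not from Tippett's table, and the function name is my own.

```python
import random
from collections import Counter


def chi_square_digits(digits):
    """Chi-square statistic for the hypothesis that digits 0-9 are equally likely."""
    counts = Counter(digits)
    expected = len(digits) / 10
    return sum((counts.get(d, 0) - expected) ** 2 / expected for d in range(10))


# 0.95 quantile of the chi-square distribution with 9 degrees of freedom.
CRITICAL_5_PERCENT = 16.919

# Stand-in data: as many digits as the book holds, drawn with an arbitrary seed.
rng = random.Random(1234)
digits = [rng.randrange(10) for _ in range(41_600)]

stat = chi_square_digits(digits)
print(f"chi-square = {stat:.2f}, suspicious at 5%: {stat > CRITICAL_5_PERCENT}")
```

Pearson's caveat still applies here. A perfectly good table will fail this check about one time in twenty, and passing it says nothing about pairs, runs, or any other pattern the test doesn't look at.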

To be honest, I’m not convinced we have moved far beyond Pearson’s perspective. We’re all guilty of not being particularly diligent about random number generation. Just set the random seed in Python to 1234 or 1337 and go with it.
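For anyone who hasn't seen the habit in action, here is a small sketch using the standard library's `random` module. The helper name `draw` is mine. The point is that a seeded generator is a lookup into a fixed sequence, much like opening Tippett's book to a particular page.

```python
import random


def draw(seed, n=5):
    """Return the first n digits produced by a generator started at `seed`."""
    rng = random.Random(seed)
    return [rng.randrange(10) for _ in range(n)]


# The same seed always replays the same "random" digits.
print(draw(1234))
print(draw(1234))
print(draw(1337))
```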

This sort of intentional randomness is good enough until it isn’t. Philip Stark and Kellie Ottoboni showed that many of the random number generators in popular statistical packages were woefully lacking. Up until 2015, Stata used a random number generator that couldn’t reach every permutation of 13 items, let alone sample them uniformly. That seems to be less than ideal. The common Mersenne Twister (MT) pseudorandom number generator (used in R and MATLAB) can’t reach every permutation of 2084 items. That’s better than Stata, but is it good enough?
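The permutation limits come from a counting argument: a generator with k bits of internal state can start from at most 2^k different places, so a fixed shuffling routine driven by it can produce at most 2^k different permutations. Once n! exceeds 2^k, some orderings of n items can never appear. Here is a rough sketch of that arithmetic. The 32-bit state size for the older generator is my assumption for illustration (the text above doesn't give it), while 19937 bits is the standard state size of the Mersenne Twister.

```python
def smallest_unreachable_length(state_bits):
    """Smallest n such that n! > 2**state_bits.

    For lists of this length or longer, a generator with `state_bits` bits
    of state cannot produce every permutation, by pigeonhole.
    """
    limit = 2 ** state_bits
    n, factorial = 1, 1
    while factorial <= limit:
        n += 1
        factorial *= n
    return n


# Assumed state sizes: 32 bits is a hypothetical small generator,
# 19937 bits is the Mersenne Twister.
for bits in (32, 19937):
    print(bits, smallest_unreachable_length(bits))
```

This is only an upper bound on what a generator can do. It says nothing about whether the permutations it can reach come out with equal frequency.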

I’ve run into many statisticians who think Stark and Ottoboni’s worries about pseudorandomness are pedantic. But I always ask them which part of statistics they think is not pedantic. Are those 50-page proofs of the asymptotic normality of standard errors not pedantic? What about all of the detailed calculations of the efficiency of maximum likelihood estimators of parameters from distributions we know are hypothetical? Every statistician argues their part of statistics is the one that needs excessive attention. The one thing I’ll give Stark and Ottoboni: they describe several ways to write better pseudorandom number generators if needed.

Regardless of whether the numbers are actually random, Pearson’s idea to simulate randomness with code has been strikingly effective. There are countless randomized algorithms whose predictions match our experience. That probably should be good enough.

At some point, I feel like I’ll need to dive into the politics of Fisher and Pearson, who remain two inescapable pillars of statistical thinking and yet held racist views that were extreme even for their time. That we rely so much on artificial concepts from these two remains concerning. So much of what bothers me about statistics and the general flattening of experience into pat answers and chance comes from their work in eugenics.

Von Neumann 1949: "Anyone who considers arithmetical methods of producing random digits is, of course, in a state of sin".

Thank you so much for your amazing article! Apologies if this is a silly question, but what are the main obstacles keeping us from using "true randomness" (insofar as that can be said to exist) in statistical experiments? Stark and Ottoboni showed that PRNGs were not particularly good at generating this "true randomness", but from my understanding, there are accessible RNGs based on quantum events or atmospheric noise that would fulfill the desired properties. What is stopping us from using these?

Thank you!