In defense of typing monkeys

We definitely are training on our test sets. This is fine.

I appreciate all of your feedback about why machine learning practice doesn’t seem to adaptively overfit to static test sets. One commenter proposed that Kaggle competitions wouldn’t tolerate people spamming the leaderboard. I agree that active Kaggle competitions wouldn’t accept querying a test set one million times, but on machine learning benchmarks, Google is just fine with it. Here’s a fun paper that evaluated the CIFAR10 test error three hundred thousand times for a single figure. I’m not exaggerating that number.

Since we query the test sets to death, the overwhelming answer to why running on the RL on the test set isn’t a problem seems to be that hyperparameter tuning isn’t powerful enough to overfit. I don’t buy this for so many reasons. First, hyperparameters are just vibes. What are the hyperparameters in a neural network?

The number of units in each layer

The number of layers

The architecture

The regularization

The weight initialization

The ADAM parameters

The input encoding

The length of the hamburger train

The random seed

The bugs in the code

As far as I can tell, the only parts of the neural network that are not parameters are the weights after initialization.1 We can tell ourselves stories that this list of ten things is somehow too weak to overfit. But if you let me run RL on the neural network weights, I can get the test error to zero. Why is it that if we optimize everything else, we don’t get the error to zero?

The paper from Google Research that I cited above that queried the test set 300K times only was messing with the architecture. The research community at large runs a giant reinforcement learning algorithm in parallel. Thousands of teams compare what each other has done in papers and get their leaderboard performances down to zero. In Kaggle competitions, competitors don’t communicate directly, but they all surf the web looking for strategies. On ML benchmarks, everyone tries to replicate everyone else's tricks and find novel innovations to hill climb from there.

And what happens? The test error gets really small over time! It goes to zero. Let’s stick with CIFAR10 for a bit because it’s not only a data set that has been queried to death and has absurdly low test error, but it’s one where my group did a replication study.

Did this massive frenzy of test set optimization adapt to the labels and yield poor out-of-sample behavior? It turns out it didn’t. The models with the best error on the public test set ended up being the best ones on the private test set.

The top model in gold was a huge convolutional neural network. It was one of the most recent models we tested. Not only did it have the lowest error on the CIFAR10 data set (2.9% error), but it had the best test error on the replicated CIFAR10.1 data set. That is, it generalized the best. Community reinforcement learning found a model with low test error and low generalization error. The bias and variance were both minimized.

In 2021, a Google team reported a vision transformer model that achieved 0.5% error on CIFAR10. If anyone knows how it fared on CIFAR10.1, please let me know in the comments.

This clustering of models “on the line,” where benchmark test error predicts out-of-sample performance, is not just a CIFAR10 artifact. It is a robust, well-replicated phenomenon, and we should try to understand it better to make sense of benchmarks and evaluations.

My guess for why the gap between theory and practice is so stark is pretty mundane: it’s that most learning theory tells us nothing about data.

In the construction of attacking the test set from last time, I only needed to reason about the labels. I said nothing about the features at all. If you have a fixed hidden bit string of length n, then with n guesses, I can recover the labels from test error. It’s not hard to verify that you can get closer to the labels by boosting random guesses. As Moritz put it, this is “Competing in a data science contest without reading the data.” You can hill climb without looking at the features. You can hill climb without looking at the training labels!

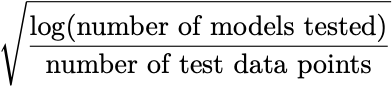

However, this is only part of the story. Sadly, most learning theory bounds are derived without reading the data. The standard Hoeffding bound that argues that the generalization gap will scale no worse than

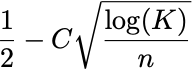

also never looks at the data. If you sample a random predictor, the mean test error is 1/2 and the variance 1/n, where n is the number of test data points. Hence, if you sample K random predictors and don’t boost, your minimum test error will be

Now, let’s say you have a set of functions that can effectively be represented by K archetypical functions. For example, a class with VC dimension d can be represented by about 2^d functions. Plug this into my expression and, lo and behold, you have a learning theory bound. This bound holds regardless of whether the VC class is any good at classifying your data. Our large deviation inequalities only care about the number of things you test, not what you are actually testing.

Learning theory almost never says anything about data. This is because if we knew something about the data, we probably wouldn’t be using nonparametric prediction algorithms. But avoiding thinking about data is problematic. Our thinking derived from learning theory is wrong and misleading. Can we think about new theories that reflect the last four decades of fruitful training on the test set?

It’s wild that this is the only thing you’re not allowed to optimize.

There actually is some interesting work on optimizing the weights at init. Essentially that's transfer learning in a bunch of setups. I was trying to do something like this and had trouble getting it to work, eventually these guys figured out one way to do it: https://openreview.net/pdf/304a7da98b79c8c15e8baa9f038951976a9ef764.pdf

My take is that the developmental program does a lot to optimize the init of our biological networks.

LOL @ No. 9. I still remember this tweet - https://mobile.x.com/beenwrekt/status/913418862191710208 and now that "optimally" is in scarequotes in the blog description, we can probably welcome No. 9 as the sneaky way of doing what you suggested in the footnote. (Early neural networks did in fact directly tune the weights and we have algorithms like NEAT and its cousins)