Evaluation or Valuation

The infinite regress of evaluating large language models

Facebook released a new language model this week, and, despite it doing well on benchmarks, everyone agrees it’s a flop. People determined that it spit out overly chatty answers, and that its cheerful babbling is why it got good scores on the Chatbot Arena. When they tested it for themselves, they found the model was just not up to snuff. How do they know? Here’s how Nathan Lambert put it:

“Sadly, the evaluations for this release aren’t even the central story. The vibes have been off since the beginning…”

My friends, it is time to accept that the only way to evaluate vibe coding is with vibes.

I think we’re finally coming to terms with the fact that we have no idea how to use machine learning benchmarking to evaluate LLMs. In the olden days of 2018, there was a brief stretch of trying to evaluate language models by building classic machine learning benchmarks. To find the “ImageNet for Natural Language Processing,” if you will. Frustratingly, the benchmarks were saturated effectively as soon as they were released. One way of interpreting this rapid saturation was that AI was now so good that there was no benchmark it couldn’t crush. The other way of interpreting it is that these NLP benchmarks suffer from the worst form of Moravec’s paradox, and language and logic tasks were far easier to solve by brute-force pattern recognition than computational linguists had realized.

Indeed, this seems to be the big research insight of the GPT era, though one that had been staring us in the face for seventy years. Models that better predicted next tokens were better models of language. If you have a model that perfectly recapitulates language, all of your tests, which are built from natural language, are redundant. Perplexity is all you need because the only way we can evaluate language models is with language. If all Language Games are language, then a perfect interpolator is going to maximize all of your benchmarks as soon as you release them.
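For readers who haven’t met it, perplexity is the exponential of the average negative log-probability a model assigns to each actual next token, so a model that better predicts next tokens has lower perplexity. Here is a minimal sketch, assuming you already have those per-token probabilities in hand; the function name and the toy numbers are illustrative only, not taken from any particular library or model:

```python
import math


def perplexity(token_probs):
    """Perplexity from the probabilities a model gave each observed next token.

    token_probs: hypothetical list of p(x_t | x_<t) values, each in (0, 1].
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Average negative log-likelihood per token (natural log).
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    # Perplexity is the exponential of that average.
    return math.exp(avg_nll)


# Made-up probabilities: the model that is more confident about the
# tokens that actually came next gets the lower perplexity.
sharper = perplexity([0.9, 0.8, 0.95])
vaguer = perplexity([0.2, 0.1, 0.3])
print(sharper < vaguer)  # True
```

A model that guesses uniformly over a vocabulary of V tokens gets perplexity exactly V, which is why the number is often read as an effective branching factor.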

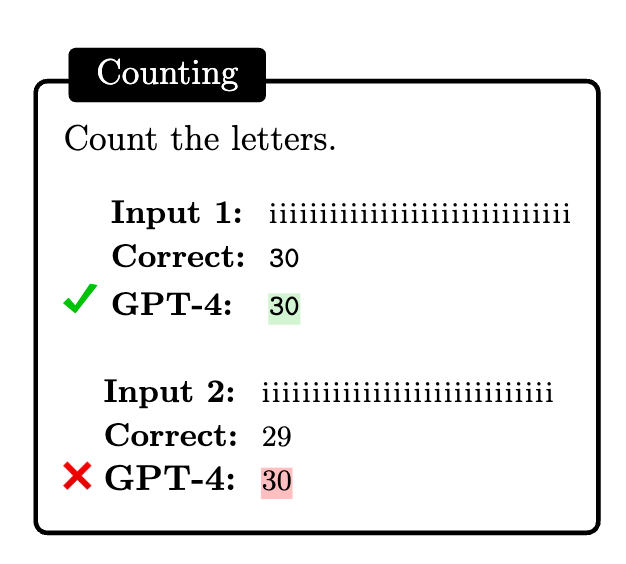

Finding quirks with language model answers can be interesting. In “Embers of Autoregression,” McCoy and coauthors find some fun examples in GPT4, like it not being able to count:

My impression is that the machine learning community has accepted that cherry-picking illustrative examples of semantic or syntactic errors doesn’t help us evaluate language models. However, these quirks do demonstrate that whatever these models are doing, it’s not what we do. That shouldn’t count as a demerit. The fact that these models undeniably produce coherent language and don’t understand semantics or syntax is one of their most amazing features. Everyone agrees that what these models produce is language, even if said language is riddled with syntactic or semantic errors. As my friend Leif Weatherby details in his new book Language Machines, this has taught us a powerful lesson about language. Large language models have revealed that poetics are more central to language than semantics or syntax.¹ This means that we need to redo linguistics from scratch. It also means that evaluating LLMs isn’t a question of quantitative analysis. It’s a question of qualitative analysis. Far fewer people like chatbots because they solve math problems than because they serve as virtual therapists.

I don’t think we should be surprised that LLM benchmarks now only function as marketing material. They are a means of arguing for twenty dollars a month. But those twenty dollars are doled out by people in offices trying to maximize productivity in writing emails, reports, and software. We’ve crossed the threshold where it no longer matters who maximizes the Gorilla GLUE suite. It’s about who can get the stickiest user base for their productivity tool.

My impression of the heated social media arguments about the capabilities of these various models is that they are akin to arguments about Google Docs versus Microsoft Word. How do you evaluate “Microsoft Word is better than Google Docs”? You could argue in favor of how Microsoft Word tracks changes, how it seems to work better on longer documents, or how it doesn’t run in a web browser (h/t Kevin Baker). How would you evaluate the assertion that “vim is better than emacs”? We’re evaluating products on human factors. You could do bullshit market research and add a quantification if you wanted to. But long-time argmin readers know I think people have way too much faith in those sorts of statistical aggregates of opinion.

Using myself as a case study, I turn to LLMs for a few pedestrian tasks once a day or so. After reading a great set of notes by Gavin Leech, I evaluated how I use LLMs. They are great for generating boilerplate Python code. I use them for summarization in paperwork.² I use them in place of Google search, but that’s mostly because Google search is a crumbling husk of its former glory.³ These are all useful things. I don’t know which models are better for my use cases. They all feel the same to me. I’ll probably give my money to the company with the least horrible billionaire in charge. Tough choices.

In any event, LLMs exist in a funny space between productivity software and science fiction revolution. However, it does seem like the evaluation of these models is quickly trending closer to the former than the latter. The future of LLM evaluation will likely look like the evaluation of all the other mundane tooling in our lives: LLMs will be evaluated with our wallets.

¹ Leif doesn’t pay me a hefty commission every time I praise his work on here, but maybe I should start charging him. In any event, he’s brilliant.

² All academics know this, but the number of times I’m asked to give summaries of my work in reports no one will read is quite astonishing. I have no qualms about outsourcing such requests to a magical language machine.

³ What a massive fuckup by Google.

It seems like OpenAI basically agrees with you! https://www.businessinsider.com/microsoft-openai-put-price-tag-achieving-agi-2024-12

"However, these quirks do demonstrate that whatever these models are doing, it’s not what we do. That shouldn’t count as a demerit. The fact that these models undeniably produce coherent language and don’t understand semantics or syntax is one of their most amazing features. "

LLMs are to human language as submarines are to fish, and airplanes are to birds.

"I use them in place of Google search, but that’s mostly because Google search is a crumbling husk of its former glory.³ These are all useful things. "

"What a massive fuckup by Google."

While I do not do that, apparently the Gemini AI summary at the top of searches has reduced the reading of search links. What does Google do now if its main revenue model is being undermined by the AI summary? [Couldn't happen to a nicer crowd of ROT artists.]