What does test set error test?

Poking the bear of construct validity in machine learning.

Two ImageNet replication studies highlight conceptual nuance in machine learning evaluation. Rebecca Roelofs, Ludwig Schmidt, and Vaishaal Shankar created “ImageNetV2,” which aimed to closely replicate the data generating process of the original ImageNet test set. ImageNet images were collected by searching Flickr and then chosen based on inter-annotator agreement that images contained a particular concept class. Becca, Ludwig, and Vaishaal painstakingly worked to match the processes described by the ImageNet team to create a dataset as close to the original ImageNet as possible.

In parallel, a team at MIT collected a dataset designed to be as far from ImageNet as possible. Their “ObjectNet” consisted of 313 ImageNet classes corresponding to household objects. However, the Mechanical Turk workers were tasked with posing these objects in unconventional ways to stress test ImageNet classifiers.

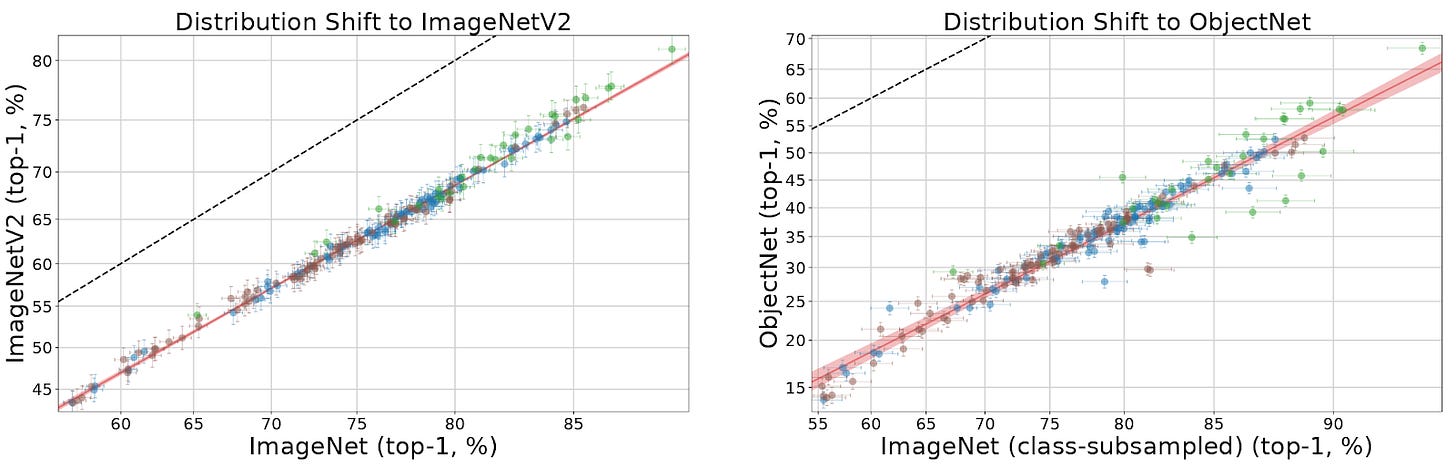

Interestingly enough, the best-performing models on ImageNet were the best-performing models on ImageNetV2 and ObjectNet:

These scatterplots display the accuracy of models scraped from the internet on the various prediction tasks. Each dot represents a single downloaded model. We see that Top-1 accuracy on ImageNet correlates strongly with Top-1 accuracy on ImageNetV2 and ObjectNet. Models cluster “on a line,” which reflects the correlation between accuracy on the two datasets. The models performed uniformly worse on ObjectNet but were still pretty good. 50% accuracy when there are 313 classes means something interesting is happening with “transfer learning.” What explains the high degree of correlation? What explains the gap between the two tests? How much correlation should we expect?
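To make “models on a line” concrete, here is a minimal sketch of how one might quantify the pattern in such a scatterplot. The accuracy values are invented purely for illustration and are not taken from either study, and the helper names `pearson_r` and `fit_line` are hypothetical. It fits a plain least-squares line to raw accuracies, which may differ from how the original studies fit theirs.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical Top-1 accuracies for five models: one entry per model.
# These numbers are made up for illustration only.
original_acc = [0.60, 0.65, 0.70, 0.75, 0.80]   # accuracy on the original test set
new_acc = [0.47, 0.53, 0.58, 0.64, 0.69]        # accuracy on the new test set

r = pearson_r(original_acc, new_acc)
slope, intercept = fit_line(original_acc, new_acc)

# The average gap between the two tests, one of the quantities the post asks about.
mean_gap = sum(o - n for o, n in zip(original_acc, new_acc)) / len(original_acc)

print(f"correlation: {r:.4f}")
print(f"line: new_acc = {slope:.2f} * original_acc + {intercept:.3f}")
print(f"mean accuracy gap: {mean_gap:.3f}")
```

A correlation near 1 together with a nonzero gap is the pattern described above: the ranking of models is preserved even though every model scores lower on the new test. The numbers alone say nothing about why.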

These are questions of construct validity. The term “construct validity” was coined by Lee Cronbach and Paul Meehl in 1955 to formalize test assessment in psychology. Cronbach and Meehl emphasize that all we have with testing are correlations. There is a test outcome, there is what we hope to measure, and then there is some correlation between the test score and the measurement. “Do sweaty palms indicate anxiety?” “Do atypical answers on a Rorschach test indicate schizophrenia?” How would you back up your answers to these questions? The goal of construct validity is to make an argument for why a test measures what its proponents say it measures. When test outcomes have high variance, construct validity should explain the factors that account for the variability in the test.

In machine learning, since we lean on being atheoretical, we seldom ask questions about construct validity. For the sake of competitive testing, test error need not mean anything beyond “guessing the labels correctly.” But for low test error to be useful for something, we need to believe that test error correlates with constructs we want to predict and act upon.

ImageNet nicely illustrates the murkiness of constructs in pattern classification. “Top-1 accuracy,” which seems to correlate with many things we care about, is already chasing a different concept than the one asked of the MTurk workers who built the dataset. In ImageNet labeling, workers were shown a panel of images and a single label class and asked to click on the ones that contained this class. For example, they were shown:

Bow: A weapon for shooting arrows, composed of a curved piece of resilient wood with a taut cord to propel the arrow.

They were then asked to click on images that contained a bow. Here are four images that at least one person clicked on:

When machine learning models are evaluated, they don’t get to take the MTurk test. In an evaluation of Top-1 accuracy, a predictor is shown a collection of images and asked to submit a single number between 1 and 1000 for each image. The score is then the frequency of labels that match those held in a hidden database. Certainly, there should be some correlation between this Top-1 task and the MTurk task. How would you articulate why they should be correlated and which constructs tie them together?
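The scoring rule described above can be sketched in a few lines. This is only an illustration under assumptions: the function name `top1_accuracy` and the tiny label lists are hypothetical, and a real evaluation would run over the full hidden test set.

```python
def top1_accuracy(predicted_labels, hidden_labels):
    """Fraction of images whose single predicted label matches the hidden label."""
    if len(predicted_labels) != len(hidden_labels):
        raise ValueError("need exactly one prediction per image")
    if not hidden_labels:
        raise ValueError("cannot score an empty test set")
    matches = sum(p == t for p, t in zip(predicted_labels, hidden_labels))
    return matches / len(hidden_labels)

# Hypothetical example: four images, labels are integers between 1 and 1000.
hidden_labels = [3, 17, 999, 42]   # held in the hidden database
predictions = [3, 17, 1, 42]       # one number submitted per image

score = top1_accuracy(predictions, hidden_labels)
print(score)
```

Note what the predictor is never asked: whether a given image contains a bow. It must commit to exactly one of the 1000 labels per image, which is a different task from the click-to-confirm test the MTurk workers took.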

When you look more broadly at machine learning tasks, these construct issues abound. Even the Machine Learning 101 examples that seem simple and uncontroversial have challenging construct validity concerns. What does it mean for an email to be spam? What does it mean for an X-ray image to be labeled “cancer?” These are subtle, partially subjective questions! It is remarkable that we can build automated tests that correlate highly with human judgment. But we are left without clear reasons for why we should trust these correlations.

The correlational phenomenon of seeing “models on a line,” as in ImageNetV2 and ObjectNet, has now been robustly, repeatedly replicated. Model performance on test sets seems deeply correlated with model performance on tasks we care about. At this point, the question is, what explains the correlation? Are we predicting what we say we’re predicting? What accounts for our prediction errors? And what ties our labels to the concepts we care about?

Maybe we can say we don’t care. Some might say we should just scrape together as massive and diverse a dataset as we can and train using clever supervision rules to cover all data we might ever see. This seems to create useful products. However, you might be left wanting more. It just seems to me that if we want people to widely adopt machine learning for predictions that affect people, we should understand what exactly we are predicting.

I'm reminded of an incisive argument about classification in Kate Crawford's Atlas of AI: What does it mean to classify a person as a certain race? Or to say someone's expression indicates "happiness"? These are loaded categories.

I think I love your blog. By the way, what construct is training set accuracy tied to? Clearly not the same one as test set accuracy; otherwise we would have no need for a test set.