The Spirit of Radio

When we decided noise was random

In a report from AT&T’s Bell Labs in 1923, H. D. Arnold and Lloyd Espenscheid described a landmark transatlantic telephone call. Speech had first been sent across the Atlantic in 1915, from Arlington, Virginia, to the Eiffel Tower in Paris. But the connections were intermittent, and only single utterances could be heard. By 1923, technology had improved to the point where, for two hours, communication from London to New York was possible “with as much clearness and uniformity as they would be received over an ordinary wire telephone circuit.”

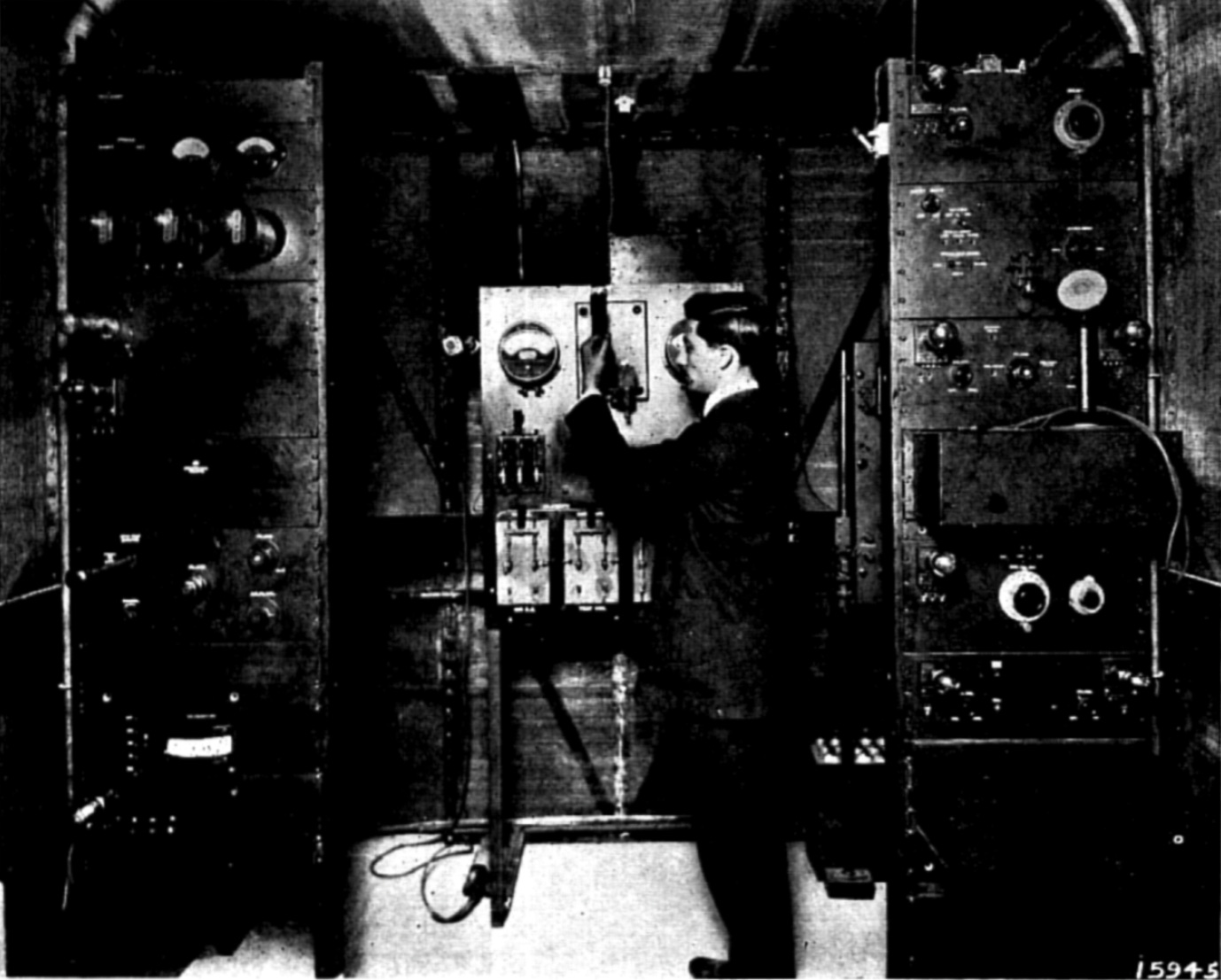

Arnold and Espenscheid spent pages describing their circuits. They designed a 15-kilowatt amplifier using two water-cooled vacuum tubes, each operating at ten thousand volts.

Years of technological improvement, including novel power amplification, had focused on reducing interference, which Arnold and Espenscheid call noise. Several pages of their report characterize how the strengths of both the noise and the transmitted signal vary over the course of a day. Different atmospheric conditions led to different transmission quality.

To illustrate these diurnal variations, they plotted the difference between the signal and noise curves over time. By convention, signal strengths were plotted logarithmically, because sound intensity is perceived logarithmically. Since the difference of two logarithms is the logarithm of their ratio, the plot displays the daily fluctuation of the logarithm of what Arnold and Espenscheid call the signal-to-noise ratio. And yes, I think they are the first to use this term.
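The step from “the gap between two logarithmic curves” to “the logarithm of the signal-to-noise ratio” is just the identity log S - log N = log(S/N). Here is a minimal Python sketch of it, assuming the modern decibel scale (10·log10 of a power ratio) as a stand-in for whatever logarithmic units the 1923 plot used. The hourly power values are made up for illustration and are not Arnold and Espenscheid’s data.

```python
import math

def to_db(power: float) -> float:
    """Power level in decibels relative to a reference of 1 (10 * log10)."""
    return 10 * math.log10(power)

# Hypothetical received powers at three times of day (illustrative only).
signal = {"06:00": 4.0e-6, "12:00": 1.0e-6, "22:00": 8.0e-6}
noise = {"06:00": 2.0e-8, "12:00": 5.0e-8, "22:00": 1.0e-8}

for hour in signal:
    gap = to_db(signal[hour]) - to_db(noise[hour])  # vertical gap between the two log curves
    snr_db = to_db(signal[hour] / noise[hour])      # logarithm of the signal-to-noise ratio
    print(f"{hour}: gap {gap:.1f} dB, SNR {snr_db:.1f} dB")
```

The two columns agree for every hour, which is why the gap between log-scaled signal and noise curves can be read directly as a logarithmic signal-to-noise ratio.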

In my pathological search for the origins of contemporary probabilistic reasoning, radio seems to be the first place where engineers treated their received data as random. There was the desired audio, and then there was an undesired signal, which might add clicks, cuts, or hiss on top of it. The noise signal was predictable only statistically. Different noise statistics would lead to different timbres of unwanted artifacts.

What gave rise to the noise? Engineers would find many sources. First, atmospheric noise from events like thunderstorms could generate signals that would be received at radio antennae. Second, and more surprisingly, engineers quickly discovered that the electronic devices used in radios would themselves generate noise.

In 1926, again at Bell Labs, John B. Johnson discovered a source of the hiss. He determined that some sort of “statistical fluctuation of electric charge” inside the electronic devices caused a “random variation of potential between the ends of the conductor.”

Let me explain the phenomenon Johnson observed, as it’s totally wild. Take an ordinary resistor and measure the current flowing through it. Ohm’s Law says that the current should equal the voltage drop across the resistor divided by its resistance. You may have seen this in a circuits class as “V=IR.” If the voltage difference between the two leads is zero, Ohm’s Law says you shouldn’t measure any current. But that’s not what happens. Instead, as Johnson observed, you’ll measure a tiny, randomly fluctuating current. Johnson found that this resistor noise had a characteristic sound:

“When such an amplifier terminates in a telephone receiver, and has a high resistance connected between the grid and filament of the first tube on the input side, the effect is perceived as a steady rustling noise in the receiver, like that produced by the small-shot (Schrot) effect under similar circumstances.”

Johnson also found that the noise power was the same at every frequency he measured, so there was no sweet spot in the frequency range for sending a carrier signal. Such noise is called white. The noise power grew in proportion to both the resistor’s temperature and its resistance.
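To make “white” concrete, here is a rough Python sketch under an idealized discrete-time model: the noise is assumed to be a sequence of independent, unit-variance Gaussian samples (a modeling assumption, not Johnson’s apparatus). It estimates the power in each frequency bin by averaging periodograms over many independent segments, using a naive discrete Fourier transform so only the standard library is needed. The function name `averaged_periodogram` is hypothetical.

```python
import cmath
import math
import random

def averaged_periodogram(num_segments: int, seg_len: int, seed: int = 0) -> list[float]:
    """Average |DFT|^2 / seg_len over independent segments of Gaussian white noise.

    Returns one power estimate per frequency bin k = 0 .. seg_len // 2.
    """
    rng = random.Random(seed)
    bins = seg_len // 2 + 1
    # Precompute the DFT kernel exp(-2*pi*i*k*n / seg_len) for every bin and sample.
    kernel = [[cmath.exp(-2j * math.pi * k * n / seg_len) for n in range(seg_len)]
              for k in range(bins)]
    power = [0.0] * bins
    for _ in range(num_segments):
        x = [rng.gauss(0.0, 1.0) for _ in range(seg_len)]  # unit-variance noise samples
        for k in range(bins):
            coeff = sum(xn * w for xn, w in zip(x, kernel[k]))  # naive DFT at bin k
            power[k] += abs(coeff) ** 2 / seg_len
    return [p / num_segments for p in power]

spectrum = averaged_periodogram(num_segments=400, seg_len=64)

# Average power in the lower and upper halves of the band, skipping the DC and Nyquist bins.
half = len(spectrum) // 2
low = sum(spectrum[1:half]) / (half - 1)
high = sum(spectrum[half:-1]) / (len(spectrum) - 1 - half)
print(f"low band: {low:.2f}, high band: {high:.2f}")
```

Both halves should come out near the per-sample variance of 1.0, with individual bins scattering around that same level: no part of the band is systematically quieter. Real resistor noise is flat only up to very high frequencies, so this model is an idealization.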

Harry Nyquist explained what was happening. It’s perhaps odd to think that natural processes are random, but physicists had been using this idea to great effect for almost a century. Even if a process was describable like mathematical clockwork, it was often easier to model the observations as if they were generated by randomly flipping coins. Appealing to the equipartition principle from statistical mechanics, Nyquist derived a simple formula for the noise characteristics that completely agreed with Johnson’s measurements.
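Nyquist’s result is usually quoted, in its low-frequency (classical) limit, as a mean-square open-circuit noise voltage of 4·k_B·T·R·Δf, where k_B is Boltzmann’s constant, T the absolute temperature, R the resistance, and Δf the measurement bandwidth. A small Python sketch of that formula follows. The function name and the example resistor, temperature, and bandwidth are illustrative choices, not values from Johnson’s experiments.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def johnson_noise_vrms(resistance_ohms: float, temperature_k: float, bandwidth_hz: float) -> float:
    """RMS open-circuit noise voltage of a resistor in the classical limit,
    from the mean-square voltage 4 * k_B * T * R * bandwidth."""
    mean_square = 4 * K_B * temperature_k * resistance_ohms * bandwidth_hz
    return math.sqrt(mean_square)

# A 1 kOhm resistor near room temperature, observed through a 10 kHz bandwidth.
v = johnson_noise_vrms(1e3, 300.0, 10e3)
print(f"{v * 1e6:.2f} microvolts rms")  # prints: 0.41 microvolts rms
```

Only the width of the band enters the formula, not where the band sits, which is the “white” property Johnson measured. Doubling T or R doubles the mean-square voltage, so the rms voltage grows by a factor of √2. At very high frequencies or very low temperatures, Nyquist’s full expression includes a quantum correction that this sketch omits.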

Effectively, equipartition arguments say that every signal with these bulk statistical properties is equally likely. We don’t know which signal, out of the infinite collection of possible signals with those statistics, we’re going to see. But it doesn’t matter which one appears, because all of these signals sound the same to the human ear. Similarly, though the particular voltage levels of the noise were completely unpredictable, the average intensity of the noise was easy to characterize. And once this average intensity was characterized, engineers could attempt to mitigate the noise by increasing the intensity of their signals or by cleverly encoding their signals so that they remained detectable.
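The contrast between unpredictable waveforms and a predictable average intensity can be sketched in a few lines of Python. This assumes, for illustration, that the noise is a sequence of independent zero-mean Gaussian samples with a hypothetical standard deviation of 0.5; the helper names `white_noise` and `mean_power` are made up for this example.

```python
import random

def white_noise(n: int, sigma: float, seed: int) -> list[float]:
    """Hypothetical helper: n independent zero-mean Gaussian samples with std sigma."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, sigma) for _ in range(n)]

def mean_power(x: list[float]) -> float:
    """Average intensity: the mean of the squared samples."""
    return sum(v * v for v in x) / len(x)

a = white_noise(100_000, sigma=0.5, seed=1)
b = white_noise(100_000, sigma=0.5, seed=2)

# Sample by sample, the two realizations disagree...
print("first samples:", round(a[0], 3), round(b[0], 3))
# ...but both average intensities land near sigma**2 = 0.25.
print("mean powers:", round(mean_power(a), 3), round(mean_power(b), 3))

# Knowing only the noise power, one can choose a signal power for a target SNR.
noise_power = mean_power(a)
for signal_power in (0.25, 2.5, 25.0):
    print(f"signal power {signal_power}: SNR = {signal_power / noise_power:.1f}")
```

Each seed produces a different waveform, yet the mean power estimates cluster tightly around 0.25, and that single number is all that is needed to decide how much stronger the signal must be.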

The 1920s were so interesting for their diverse theories of randomness. Keynes pioneered his view of logical probability. Ramsey soon countered with his development of subjective probability. Physicists grappled with interpretations of quantum mechanics. John von Neumann discovered mixed strategies in games. Wiener formalized what we now call the Wiener process. Fisher invented maximum likelihood estimation. Neyman and Fisher did pioneering work on randomized experiments. All of this was before Kolmogorov’s axiomatization of probability in the 1930s. I’m going to spend some time here looking at this period, when “random” meant very different things to different people, to try to understand a bit more about how we decided everything random was the same.
