Predictions and actions redux

On the complex interactions between uncertainty quantification and recourse.

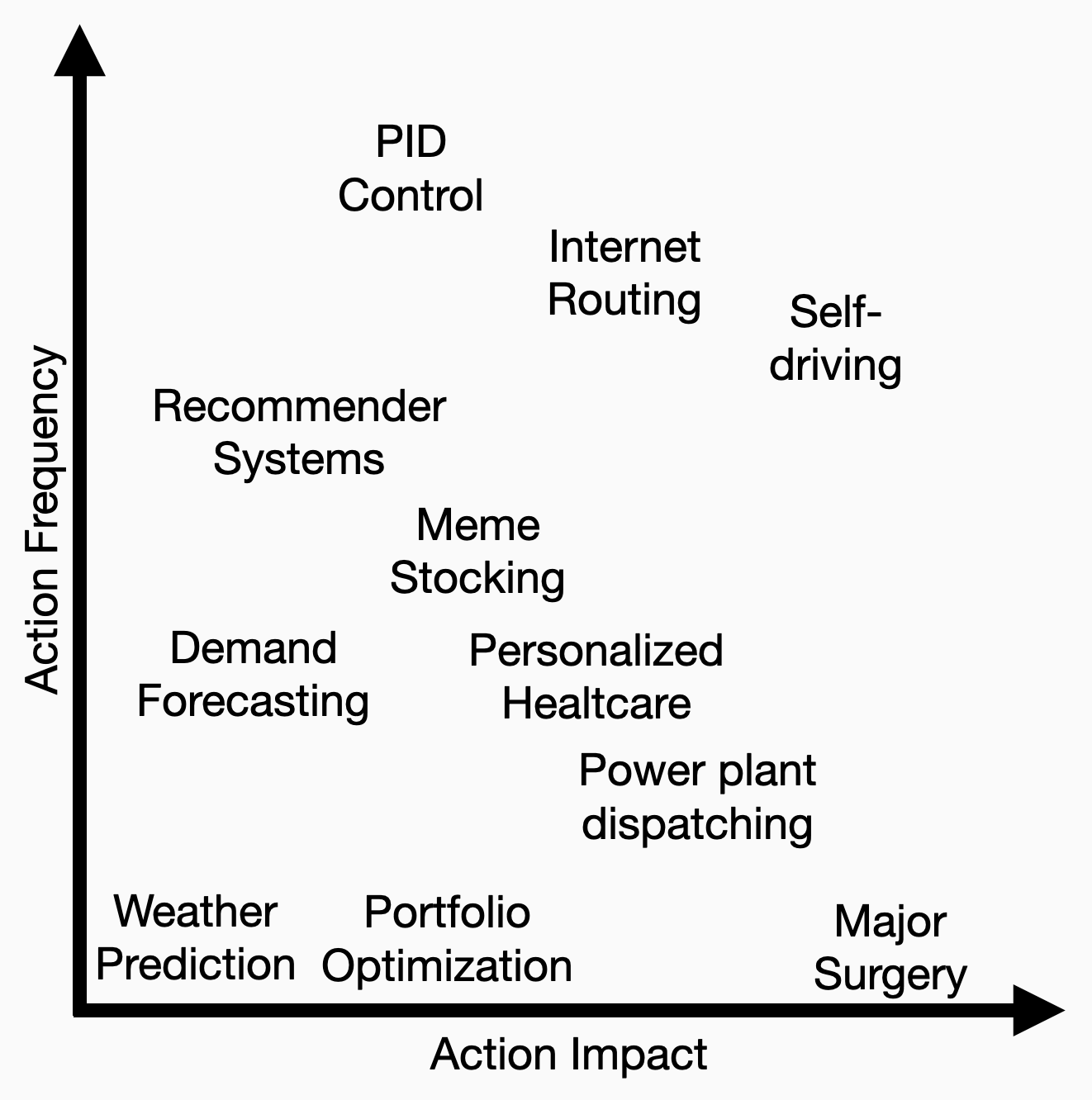

Thanks to everyone who engaged with applications of prediction bands. In my attempt to summarize the responses, I came up with two axes that seemed to span the space: How much does an action affect the outcome? How frequently can you act? The answers to these questions seem to indicate both the value and difficulty of uncertainty quantification in a particular application.

At one extreme, let’s take an example in weather prediction where you can only act once, but your actions have no effect on the outcome (thanks to Rohan Alur and Sarah Dean for this one). You have a nice outdoor garden that could be damaged by a frost. You want prediction bands around overnight temperatures to decide whether to put out a frost protection tarp. Perhaps the residuals on weather prediction are exchangeable, so that a nice prediction band gives you a reasonable estimate of the probability of the temperature dipping below zero. Here, you can act exactly once, and the goal is to protect your plants from damage. But whether you tarp or don’t tarp won’t change the weather. This seems like an ideal setting for a marginally guaranteed prediction band.
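To make the frost example concrete, here is a minimal sketch of a split-conformal band built from past forecast errors. Everything in it is an assumption for illustration: the function names, the residual values, the 10% miscoverage level, and the rule "tarp if the lower end of the band touches zero" are mine, not part of the original discussion. Under exchangeable residuals, the band covers the true temperature with probability at least 1 − alpha, which is exactly the marginal guarantee.

```python
import math

def conformal_halfwidth(residuals, alpha):
    """Half-width q such that forecast +/- q is a split-conformal band.

    Uses the ceil((n + 1) * (1 - alpha))-th smallest absolute residual.
    Returns infinity when there are too few residuals for the level.
    """
    n = len(residuals)
    scores = sorted(abs(r) for r in residuals)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf
    return scores[k - 1]

def should_tarp(forecast_c, halfwidth, frost_threshold_c=0.0):
    """Hypothetical decision rule: tarp if the band reaches the frost threshold."""
    return forecast_c - halfwidth <= frost_threshold_c

# Made-up past forecast errors in degrees Celsius (observed minus predicted).
past_errors = [0.4, -1.1, 0.7, -0.3, 1.8, -2.2, 0.1, -0.6, 1.2, -0.9,
               0.5, -1.5, 0.2, 0.9, -0.4, 1.0, -0.8, 0.3, -1.3, 0.6]

q = conformal_halfwidth(past_errors, alpha=0.1)
print("half-width:", q)
print("tarp tonight?", should_tarp(forecast_c=1.5, halfwidth=q))
```

Because tarping does not change the weather, the residuals you calibrate on tomorrow look just like the ones you calibrated on today, and nothing about the decision invalidates the band.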

At another extreme, consider uncertainty quantification in a self-driving car. Shai Shalev-Shwartz uses bands in the context of planning. He estimates the kinematic properties of other drivers and pedestrians and has the autonomous vehicle react to the worst value within the band instead of to the mean. In this case, the car computer makes decisions in milliseconds, updating its predictions and replanning if the uncertainty bands are wrong. The self-driving car can keep acting until it crashes or stops in the middle of traffic (as they like to do in San Francisco). But each action of the car immediately impacts the distribution of future events. The control policy changes the distribution of the car’s state and how other road users react to the car’s behavior. In this case, you need a conditional guarantee on uncertainty quantification, but you can rapidly respond even if your predictions are inaccurate.

These two examples highlight the extremes in decision-making under uncertainty. I’ve made a cartoon plot to highlight some other examples that came up in my online and offline discussions.

Portfolio Optimization. In the most classic version of the problem, you are a small-time trader whose purchases do not affect market prices. You try to put together a collection of stocks to buy. You want good returns but a low probability of losing more than some fixed amount. This last statement is a probabilistic set constraint you might implement with a marginally guaranteed prediction band.
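One way to write this down, as a sketch in my own notation (the symbols $w$ for portfolio weights, $r$ for random returns, $\ell$ for the loss limit, and $\alpha$ for the tolerated probability are assumptions, not from any particular reference):

$$
\begin{aligned}
\text{maximize}_{w} \quad & \mathbb{E}[r^\top w] \\
\text{subject to} \quad & \mathbb{P}\left[r^\top w \le -\ell\right] \le \alpha, \\
& \mathbf{1}^\top w = 1, \quad w \ge 0 .
\end{aligned}
$$

If a prediction set $C_\alpha$ satisfies $\mathbb{P}[r \in C_\alpha] \ge 1 - \alpha$, then requiring $r^\top w > -\ell$ for every $r \in C_\alpha$ is sufficient for the probabilistic constraint, since a loss of $\ell$ or more can only happen when $r$ falls outside $C_\alpha$. This is a conservative replacement, not an equivalent one.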

Meme Stocking. Instead of just investing, you get on Reddit and brag about your returns. Since it’s a bit too easy for everyone to invest, you induce a giant herd of people to follow your lead (insert rocket emoji). While Matt Levine tells me that this can be a successful way to make a ton of money, you need to be careful with your statistical models, as your actions as an investor are changing the future distribution of stock prices.

Demand Forecasting. You are trying to keep your shelves stocked at the Amazon store. You assume that whether or not you have a particular product does not affect consumer demand, as the consumer will go elsewhere. You want to order enough of each product to meet demand but not so much that you must pay for storage. In this case, a probabilistic band is helpful to guarantee you stay in an appropriate range, but you can act with recourse if demand is too high. You can order more tomorrow if you run out today.
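A minimal sketch of what this could look like, assuming you set a stocking target at an empirical quantile of past daily demand and top up to that target each morning. The function names, the demand history, and the 80% service level are all hypothetical choices for illustration.

```python
import math

def order_quantity(past_demand, service_level):
    """Smallest stock level that would have covered a service_level
    fraction of the past days (an empirical quantile)."""
    ranked = sorted(past_demand)
    k = math.ceil(service_level * len(ranked))
    return ranked[max(k, 1) - 1]

def restock(on_hand, target):
    """Recourse step: order only what is needed to get back to the target."""
    return max(target - on_hand, 0)

# Made-up daily unit sales for one product.
history = [10, 12, 8, 15, 11]

target = order_quantity(history, service_level=0.8)
print("stocking target:", target)
print("order after a busy day:", restock(on_hand=3, target=target))
print("order after a slow day:", restock(on_hand=20, target=target))
```

The quantile plays the role of the upper end of a one-sided prediction band. The `restock` step is the recourse: if the band was too optimistic today, tomorrow's order absorbs the miss.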

Power Dispatching. In this extreme case of supply and demand, you are a prominent power provider and have to decide whether to turn on a big coal power plant to meet the expected demand for the day. You make predictions based on past behavior and weather and only fire up the plant if your cleaner energy systems won’t meet the demand. If you choose incorrectly, you can get power outages, which will undoubtedly influence demand.

Recommender Systems. You want to recommend content to a user base based on their past behavior. You can retrain your predictions daily and build prediction sets around expected interests. Your actions affect user behavior through availability: if you change the app a person interacts with, their behavior changes. Even though the extent of induced behavior changes is hard to quantify, we’re now edging closer to needing conditional guarantees on uncertainty. However, you can adjust recommendations quickly if you sense satisfaction is dropping.

Predictions of personalized treatment decisions. [h/t Manjari Narayan]. A physician would like to understand the likely outcome of some treatment program for their patients. They might have access to prediction bands based on person-specific covariates. Prediction bands here would help identify the patients where doctors are uncertain if a treatment works, in which case they can resort to another treatment. But for many applications here, the physician can revise their treatment plan over time by consulting with a patient.

Major Surgery. Clinical risk scores are designed to predict the risk of a patient having a severe adverse outcome so caregivers can intervene to prevent the outcome. By design, these scores exist so that the action changes the prediction. And in many cases, you can only act once. If you are trying to prevent death from a major coronary event or septic shock, it’s essential to get the answer correct the first time.

This is just a partial list, but it starts to flesh out the complexity of the interaction between forecasting and action. If you have multiple stages of recourse, it almost doesn’t matter if your prediction bands were correct. What matters is whether you can do something when your predictions are wrong. If you can, point predictions coupled with subsequent action are enough to achieve nearly optimal decisions.
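Here is a toy illustration of that last point, under strong assumptions: demand is constant, the point forecast is badly biased, and each day you nudge your order by half of yesterday's miss. The function name, the numbers, and the correction rule are all made up for the sketch; it is not a claim about how any real system does this.

```python
def run_with_recourse(forecast, true_demand, days, step=0.5):
    """Order forecast + correction each day, then move the correction
    toward the observed miss. Returns the list of daily misses."""
    correction = 0.0
    misses = []
    for _ in range(days):
        order = forecast + correction
        miss = true_demand - order  # positive means we ran short
        misses.append(miss)
        correction += step * miss   # act on what we just observed
    return misses

misses = run_with_recourse(forecast=80.0, true_demand=100.0, days=10)
print([round(m, 2) for m in misses])
```

The forecast never improves and no band is ever computed, yet the miss halves every day. The repeated corrective action, not the quality of the prediction, is doing the work.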

The most impressive application highlighting this power of rapid action is the internet. Our internet infrastructure routes an unfathomable amount of traffic at unfathomable rates. At such rates, the computation per packet must be minimal. Estimating probabilities of bad, unexpected events is infeasible. Instead, systems are designed to be ready for when bad events happen. As Scott Shenker says, “The key to scaling is being able to recover from failure.”

I recognize that most of what I wrote today is vague and unclear. I’m working on figuring this out in real time. That’s part of the fun of blogging. I will try to unpack this more in the next post, where I’ll dig into conceptual examples from bandits and control that highlight the tension between uncertainty quantification and recourse.

If you use PID to stabilize an unstable system, is that in the top-right corner of the cartoon map? I suppose there are implicit "prediction bands" also with standard PID control in the sense that the gain and phase margins have to be sufficient. The plant has to exist within a certain range. There is no need to do it, but I guess it is possible to visualize a possible range of open loop short time ahead forecasts based on the allowed plant range, from the at-present state. Bit contrived maybe.

Ben, one question regarding the work of Shalev-Shwartz. The tweet you linked is much more concise than what you describe. Do you have a reference for that handy? Thank you.