Power Posing

Meehl's Philosophical Psychology, Lecture 6, part 5.

This post digs into Lecture 6 of Paul Meehl’s course “Philosophical Psychology.” You can watch the video here. Here’s the full table of contents of my blogging through the class.

As Meehl has noted, his ten obfuscators point in opposing directions, which is why they make the literature uninterpretable. For Meehl, insufficient power takes good theories and makes them look bad. The point here is simple: when studies are too small, null results don’t necessarily provide evidence that the theory is false. If you legitimately have 80% power and a theory of high verisimilitude, there’s a 1 in 5 chance you’ll run your experiment and fail to reject the null hypothesis. 1 in 5 seems like a pretty poor bet for a study requiring multiple person-years to execute. Meehl’s point is that if you don’t pay attention to power, you’re shooting yourself in the foot when trying to corroborate your theory.

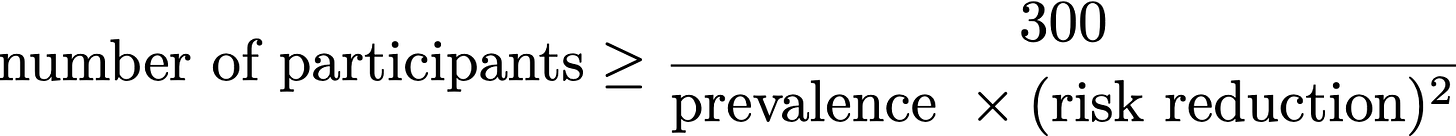

What would it take to get higher power? The answer is always more data. Let me use yesterday’s example of a vaccine study to illustrate how these power calculations change as we ask for more precise studies. To repeat the setup, we have a vaccine that we think prevents a disease. To compute the power, we guess the prevalence (i.e., what percentage of people will catch the disease without the vaccine). We assert a tolerable level of risk reduction (i.e., the percent fewer people who will get the disease if they take the vaccine). If we want to have a power of 80% and a size of 5%, the number of people we need to enroll in the trial is

$$
n = \frac{32}{\text{prevalence} \times (\text{risk reduction})^2}
$$

If the prevalence is one in a hundred and the tolerable risk reduction 50%, then we’ll need to enroll about 13,000 people in the study. That’s already pretty big. But what if we demanded a power of 99.999% and a size of 0.001%? Then we’d need

$$
n = \frac{300}{\text{prevalence} \times (\text{risk reduction})^2}
$$

On the one hand, this is only about nine times larger. Less than an order of magnitude more samples yields a massive increase in statistical precision. On the other hand, for the same levels of prevalence and risk reduction, we’d need about 120,000 participants. Factors of nine matter a lot when it comes to fundraising for studies.
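Here is a rough sketch of where sample sizes like 13,000 and 120,000 can come from. It is my reconstruction, not necessarily the calculation behind the numbers above: it assumes a two-sided test, two equal-sized arms, a normal approximation, a rare disease, and a conservative bound on the variance. Under those assumptions the total enrollment is about 4(z_size + z_power)² divided by prevalence times the squared risk reduction. The function names are made up for illustration.

```python
from statistics import NormalDist


def numerator(power, size):
    """Constant in n = numerator / (prevalence * risk_reduction**2).

    Assumes a two-sided test, equal arms, a normal approximation,
    and a rare disease (variance bounded conservatively).
    """
    std_normal = NormalDist()
    z_size = -std_normal.inv_cdf(size / 2.0)  # critical value of the test
    z_power = std_normal.inv_cdf(power)       # quantile for the target power
    return 4.0 * (z_size + z_power) ** 2


def required_enrollment(prevalence, risk_reduction, power=0.80, size=0.05):
    """Approximate total number of participants across both arms."""
    return numerator(power, size) / (prevalence * risk_reduction ** 2)


# The two settings discussed in the text: (power, size).
for power, size in [(0.80, 0.05), (0.99999, 0.00001)]:
    n = required_enrollment(0.01, 0.5, power=power, size=size)
    print(f"power={power}, size={size}: "
          f"numerator ~ {numerator(power, size):.1f}, n ~ {n:,.0f}")
```

With a prevalence of 1% and a risk reduction of 50%, this lands near the figures quoted above, and the ratio of the two numerators comes out a bit over nine.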

But people need to do experiments! So they make up some optimistic projections so that the number of participants works out to be exactly the number they think they can feasibly enroll given their staffing and budget constraints. It’s easier to be optimistic about your result when the numerator is 32 rather than 300.

Since we’re all budget-constrained, we end up with a world of underpowered studies. But this is where Meehl’s argument gets confusing. If a study has insufficient power, it will likely yield a null finding even when the experimenter’s theory is true. As we’ve discussed before (and I’ll return to it when unpacking Lecture 7), we don’t tend to publish null results. I’ve argued that not publishing null findings is acceptable practice. That’s not the issue here. My issue is that if low power just causes more null findings, we would see fewer publications and no knocks on good theories in the scientific literature.

If most studies were underpowered, we’d see more null results and fewer publications, right? We all know that’s definitely not what’s happening. We see an endless doom-scroll of non-null results. Is low power really then a problem? I’m not sure! I’m probably missing something here.

Now, in the 2000s, John Ioannidis leveled a different flavor of critique against the ubiquity of low power. He proposed a seductive “screening model” for evaluating studies using Bayes’ rule. You plug in the power and size into a Bayesian update, put the prior on theories being true to be low, and voila, most published studies are false. With respect to Dr. Ioannidis, I don’t buy this argument at all. Taking power and size as literal, exhaustive likelihoods is not valid. I may have more to say about Ioannidis’ “positive predictive value” interpretation in future posts. For now, if you want to read more, Mayo and Morey have a detailed critique of this viewpoint.
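For concreteness, here is a minimal sketch of the arithmetic behind the screening model as I’ve described it. It treats power as the probability of a significant result when the theory is true and size as the probability of one when it is false, which is exactly the literal reading I’m skeptical of. The function name and the example numbers are mine, chosen only for illustration.

```python
def positive_predictive_value(power, size, prior):
    """Bayes' rule for P(theory true | significant result).

    Reads power as P(significant | true) and size as
    P(significant | false); that reading is an assumption of the
    screening model, not an established fact about real studies.
    """
    true_positives = power * prior
    false_positives = size * (1.0 - prior)
    return true_positives / (true_positives + false_positives)


# Hypothetical inputs: drop the prior and the power and watch the
# "probability a published finding is true" fall.
for power, prior in [(0.8, 0.5), (0.8, 0.1), (0.2, 0.1), (0.2, 0.01)]:
    ppv = positive_predictive_value(power, size=0.05, prior=prior)
    print(f"power={power}, prior={prior}: PPV = {ppv:.3f}")
```

The conclusion is baked into the inputs: once the prior is set low enough, “most published findings are false” follows mechanically, whatever the studies actually looked like.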

But for today, I’ll just leave it at this: I agree with Meehl that small studies make it hard to corroborate good theories. We should try to make studies as large as feasibly possible. Fortunately, we’re in the age of big data and can grab huge data sets that never have power issues. This should solve the problem, right? Unfortunately, it solves one problem while creating another. As we’ll see in the next post, too much power can be a bad thing in observational studies. Because everything, everywhere is correlated.

What if all you need to explain the so-called replication crisis in fields like psych is natural heterogeneity when effects are measured repeatedly (due to unmeasured confounders) and misunderstanding of statistical power? If observed effects will vary even when we think we're running the exact same experiment, and replications are also underpowered, you don't necessarily need all the complicated theories about people trying to game things to explain why nothing seems to replicate. It's all just a massive misunderstanding of statistical power.

I think that would be funny. I bet Meehl would too.