Indiscipline

Can convex optimization ever be a technology?

This is the live blog of Lecture 20 of my graduate class “Convex Optimization.” A Table of Contents is here.

Convex optimization promises that if you model your problem using the composition rules of convexity, you can pass your model to one of many solvers that will efficiently solve your problem. The primal-dual interior point methods we discussed last week show that one solver idea does indeed capture all you need to solve all of the problems in the course. The introduction to Boyd and Vandenberghe even promises that these algorithms will need a number of equation solves “almost always in the range between 10 and 100.”

But I wish it was quite so simple. I’m going to make a confession on behalf of optimization researchers. At some point, you will run up against the limits of the solvers and have to get under the hood.

These limits can pop up in the silliest ways. Let me give an egregious example. Consider the following simple constrained least squares problem

Here q is a positive scalar. By staring at the problem, it’s clear that the minimum is attained when x=1/q and z=0. You can verify this using the first-order optimality condition. Any direction that decreases x from this value increases both terms in the cost function. The optimal value of the objective at this point is equal to 1.

Now, what happens when I plug this into cvxpy…?

Huh, it’s telling me the answer should be x=0.5/q and z=0.5/q? The objective value of this point is 2.5, well off from what the real optimal value should be.

What went wrong here? I tried using cvxpy’s “verbose” settings, but they didn’t provide any actionable insights. The solver thought it had solved the problem. OK then.

Initially, I thought I had stumbled on a simple illustration of ill-conditioning in convex optimization. Condition number is still an unavoidable can of worms I’ll open later this week, but the answer turns out to be much less elucidating for this particular two variable problem.

I then stared at the optimality conditions. If we let u be the Lagrange multiplier for the equality constraints and s and t be the Lagrange multipliers for the inequality constraints, then the KKT conditions for this problem are

If we set s and t to 0 and x and z to 0.5/q, then the last three equations are satisfied. If we then assign u=q/2, the first equations are approximately satisfied when q is small: the right-hand sides are equal to -q and q, respectively.

Clearly, they are not equal to zero, but solvers can never solve the KKT conditions exactly. They are working with floating point numbers, after all. All convex solvers check termination conditions, testing whether the KKT conditions are solved to a reasonable amount of precision. “Reasonable amount” is defined by some solver parameter that you can find when you read the manual. Apparently, the OSQP solver is happy with residual error in the KKT conditions of 4e-5. Maybe you would be too.

In cvxpy you can use “advanced features” to access the values of the dual variables. For this problem, it told me that the dual variables s and t were indeed equal to zero, and it also told me that u=q/2. My intuition was right. I figured I could fix this by asking the solver to look for a more precise solution, but here I got stuck. Unfortunately, cvxpy doesn’t provide any hooks to solver precision.

Since it supports so many solvers, supporting access to such solver parameters would be a big ask. Each solver has multiple settings for convergence precision, and not all solvers have the same settings. Some might let you set the absolute error for absolute error in the primal, some relative error in the dual. MOSEK has ~50 solver parameters and countless other solver flags. And for all I know, solvers might measure constraint violations differently. It’s not clear at all what cvxpy could do to have a “disciplined” interface to solver precision.

However, the promise of Disciplined Convex Programming is that you won’t have to do that sort of solver tweaking. Unfortunately, my very simple problem here shows how solver tweaking is unavoidable.

Now, you of course will say, “Set q=1 and there’s no problem.” That is correct, but shows the hidden burden convex programming places on the modeler. Sometimes, problem parameters like q get set by other code in a more complex pipeline. Loosely specified solver precision now adds one more headache to your modeling. You have to ensure that no variables get too small with respect to other variables in your code. The annoying part is it’s hard to see which ones in advance.

And frankly, 1e-5 is not that small! Imagine if the equality constraint was representing the dollar value of some portfolio and the cost was targeting some fractional allocation. That could easily result in a problem like the one I’m describing. We just have to be wary that, even 20 years after the publication of this book, convex optimization is not yet a foolproof technology.

To make matters even worse, here’s worse pathological behavior in nearby problems.

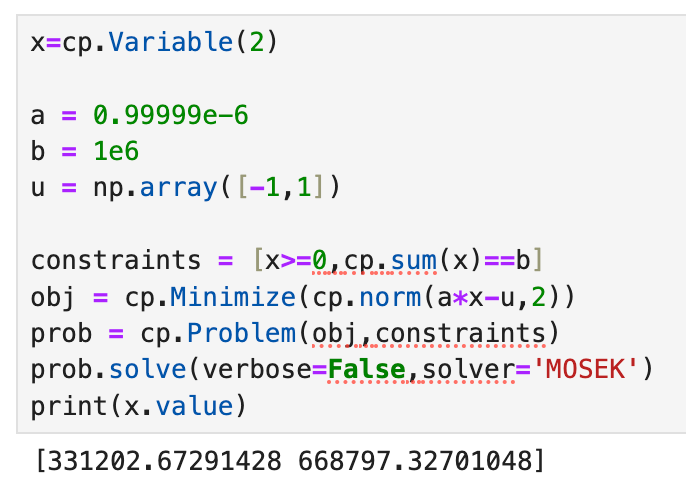

I can reformulate this two variable quadratic program as a second-order cone program. With a value of q 25 times smaller than the one I used above, the solver MOSEK gives me the correct answer:

But if I change one of the parameters a tiny tiny bit, I get the wrong answer:

Come on, man.

You can specify solver precision in cvxpy. For your example if you run prob.solve(verbose=True, eps_abs=1e-7, eps_rel=1e-7) OSQP gets the correct answer. By default OSQP solves to tolerances of 1e-4.

You could also call Clarabel and it will solve the problem correctly since its default tolerances are 1e-8. Seems like the issue is that by default OSQP uses tolerances that are extraordinarily loose compared to any IPMs. It also looks like SCS (also a first order solver) by default uses extremely loose tolerances (1e-5) and gets the wrong answer.

Another take away is that you should by default use IPMs which are able to solve to high accuracy.

> Loosely specified solver precision now adds one more headache to your

> modeling. You have to ensure that no variables get too small with respect to

> other variables in your code. The annoying part is it’s hard to see which ones

> in advance.

Out of curiosity, would you label this behavior as hindering reproducibility or

replicability (or both, or neither)?