Hidden Foundations

We hide a lot of theory in our representation of data.

Machine learning algorithms consume data as abstract mathematical vectors. Though the trend over the past decade has been to avoid too much data pre-processing and have the complex neural networks “learn” features, all of these networks rely on the impressive technology that digitally renders reality into boxes of numbers. How exactly do we decide how to represent a snapshot of the world as a vector? This seems to be where all the action is!

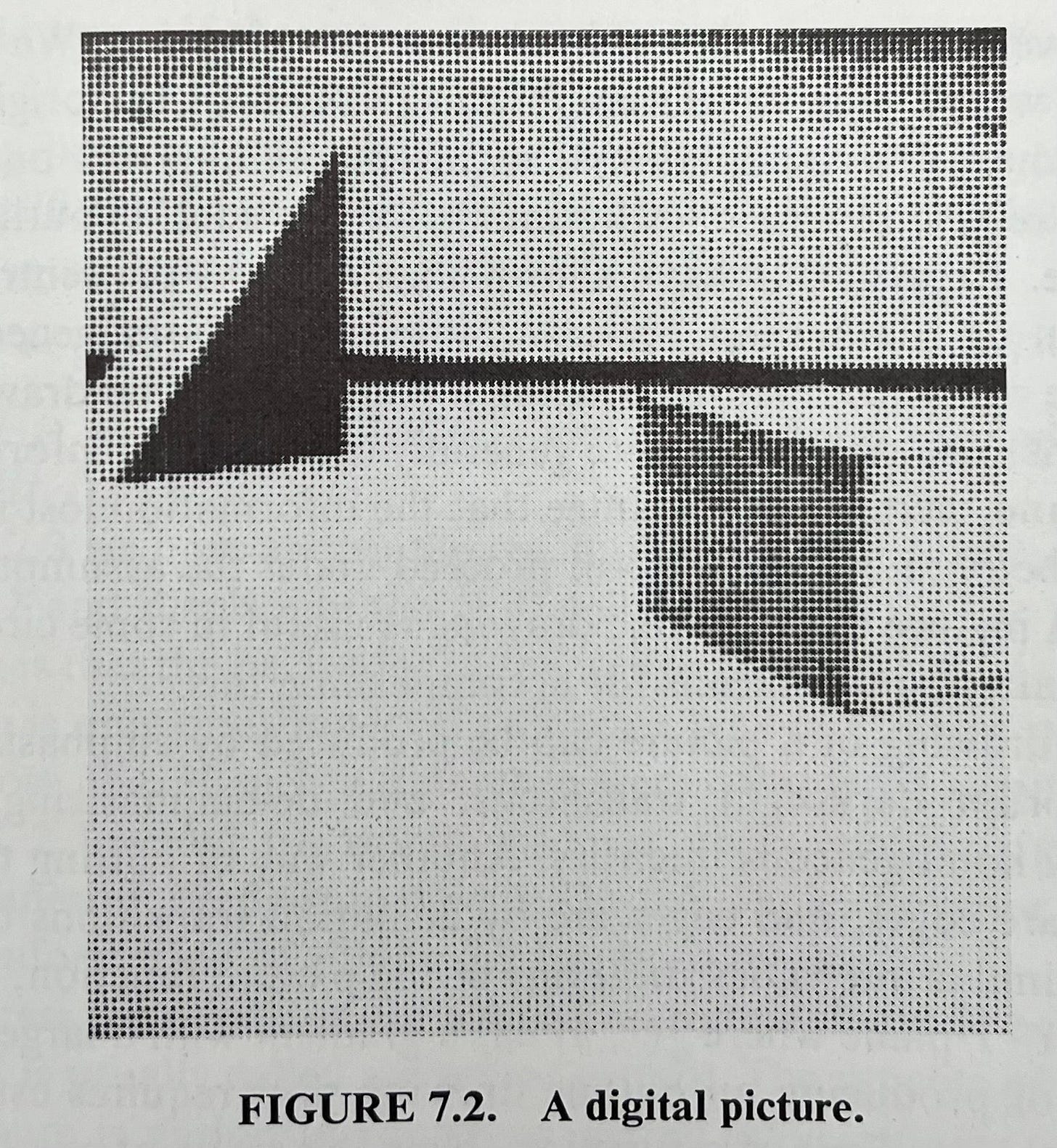

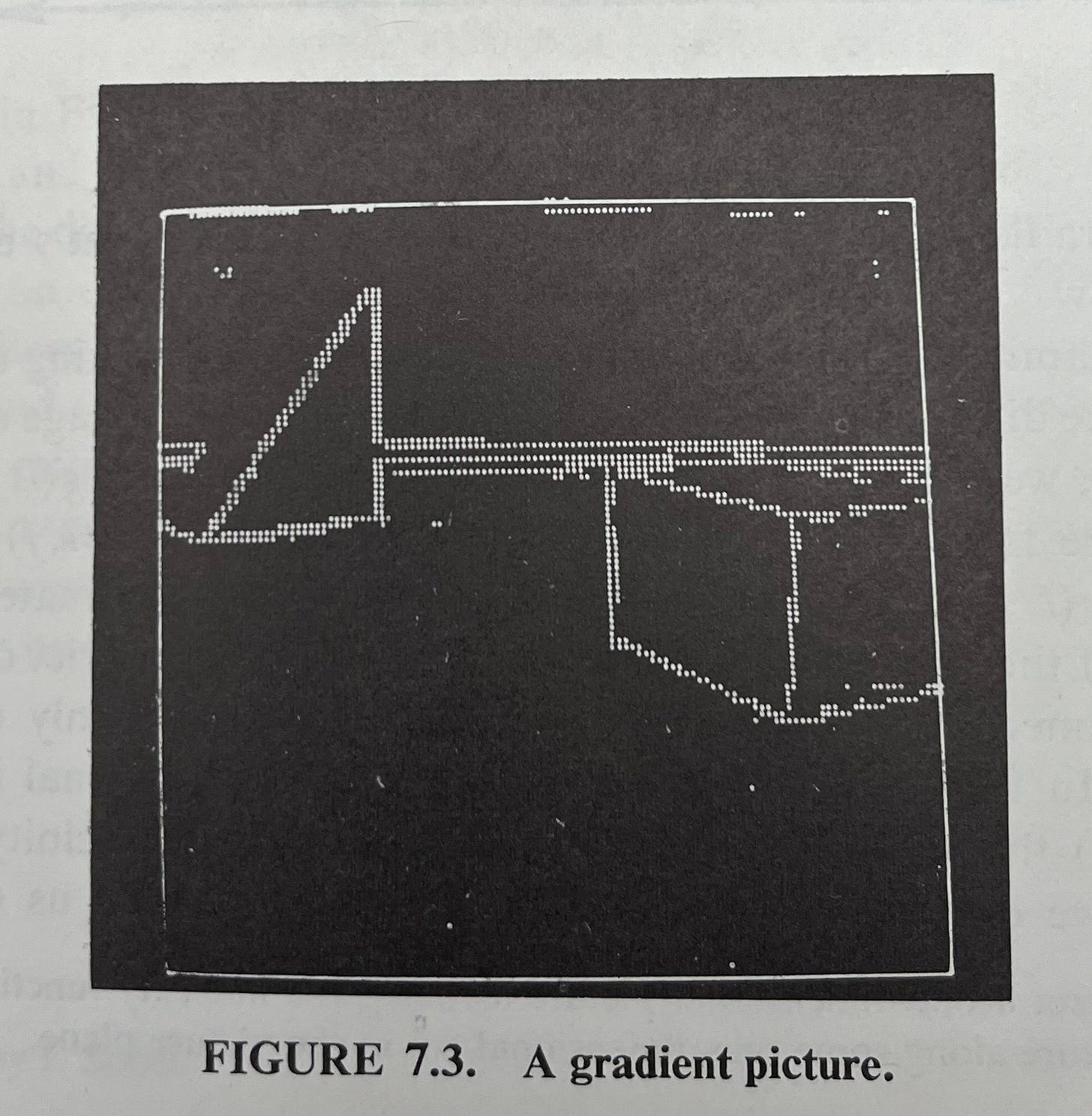

Let’s focus on images. It seems reasonable to think that an image is a function of two-dimensional space. The function value at any point is a brightness. If we want to be fancier, we can have the function have three outputs: brightness, saturation, and hue. Now, I don’t have any good ideas for some analytic form for the function from space to brightness. So we’ll need a very general representation of these image functions on a computer. The most general would be a discrete lookup table. That is, we’ll represent the image as a numerical array on disk. To build that array, we can use guidance from sampling theory about how to spatially filter the image to avoid aliasing and artifacts.

Once we have the array, maybe we’d like to compress it into some more useful shapes. Perhaps we should only use edges because these capture the salient parts of the scene. We can compute edges by finite differencing. From the new image of edges, maybe we’d like to find higher-level structures that could potentially differentiate scenes. So we can build small template images and create a new array that measures the dot product between each template and that region in the original image. These templates might be for shapes like triangles or for patterns of faces. Then we could find a hierarchy between these template matchers and end up with a succinct scene summary.

This last paragraph is now out of fashion. It described computer vision in the aughts, but has gone out of favor for neural net approaches. Even so, I think it captures the motivation for neural architectures for images. These basic ideas of stacking convolution operators on top of well-sampled images certainly remain. We rely on images having rich spatial frequency information and are inspired by the convolution theorem from Fourier Analysis. The notion that we can represent images as multi-dimensional arrays of spatial frequency content… I mean, isn’t that the motivation behind the Vision Transformer?

I’m not sure we should be surprised by this anymore, but all I have described so far is in Duda and Hart. (Again, folks, this is from 1973!!!). Their book is titled “Pattern Classification and Scene Analysis.” We’ve talked about how the parts on Pattern Classification remain relevant. But the Scene Analysis section of the book is full of insights still used in machine learning too. This blog summarizes chapters 7 and 8 of their book. Here are their figures mapping an analog image to a digital image to edges.

Though Duda and Hart focused on images, the same ideas apply to audio processing for speech. There will be different patterns and templates to segment, but audio is well represented by a sequence of temporal frequency content. And to the chagrin of Natural Language Processing researchers, the biggest surprise of the deep learning era is that text is also best represented as sequences of template-matched vectors.

Beyond spatiotemporal sequence data, we need other forms of prior knowledge to generate features for machine learning. How does a human get rendered as an electronic health record? And how is this collection of billing codes and random notes a useful representation for effectively predicting healthcare outcomes? Fourier analysis probably won’t help here. But domain knowledge is critical to understanding what to do with this data and how to interpret any predictions gleaned from it. This might not be mathematical theory, but some theory is needed. It’s not the case that just getting bigger data sets is all that matters. You must know what the data signifies and whether it signifies what you want.

The features we use in machine learning are anything but arbitrary. And here is where I end the week with a robust defense of theory. Machine learning’s most impressive demos rest on centuries of signal processing. They require an understanding of how images are formed and what they represent. They require theory of how sound is generated and perceived. They require foundations of Fourier analysis, sampling theory, and projective geometry. To further innovate in machine learning, we need to know centuries of theory so that we can forget it. But we’d be in trouble if we decided to stop teaching these fundamentals.

Preach, brother!

Also, look at the front portion of Lana’s computer vision syllabus: http://slazebni.cs.illinois.edu/fall22/

Your argument seems transferable to preference learning models (which use permutation statistics). Also, two books that maybe relevant here (both from psychometrics)

+ Foundations of Measurement by Luce, Suppes and others

+ A Theory of Data by Clyde Coombs (from the 60s and from the Michigan school)